The method for installing Docker on AlmaLinux 9.3 is essentially the same as for CentOS 8 and later. AlmaLinux is designed as a successor to CentOS, and many tasks related to package management and system administration can be performed in a similar manner. We’ll follow the installation steps outlined on the official Docker website.

What is AlmaLinux?

AlmaLinux is a free, open-source Linux operating system (OS). Linux, like Windows or macOS, is one of the operating systems used to run computers. AlmaLinux is a type of Linux that is particularly popular on servers, trusted by companies and developers alike.

Key Features of AlmaLinux

Successor to CentOS: AlmaLinux was introduced as a successor to the once-popular CentOS Linux. After Red Hat announced that CentOS Linux would be discontinued in favor of CentOS Stream, AlmaLinux was created to provide a similarly stable alternative.

Free to Use: AlmaLinux is available for anyone to download and use for free. There are no licensing fees, and it can be used for commercial purposes.

Stable and Long-Term Support: AlmaLinux is supported for an extended period (up to 10 years), making it a reliable choice for critical systems like servers.

Compatibility with CentOS and RHEL (Red Hat Enterprise Linux): AlmaLinux is almost identical to CentOS and RHEL, allowing users to easily switch from those operating systems to AlmaLinux. System administrators and developers can continue using familiar commands and configurations, which is a significant advantage.

When Should You Use It?

AlmaLinux is often used as a server operating system. For instance, it can be installed on computers (servers) that host websites or run applications, providing a stable, long-term solution.

Is AlmaLinux Difficult to Use?

At first, it may require some getting used to, but there are plenty of guides and tutorials available online. With just a bit of practice and knowledge of basic commands, even beginners can use AlmaLinux effectively.

AlmaLinux is a free, stable Linux operating system.

As the successor to CentOS, it has gained the trust of many users.

It is commonly used on servers and offers long-term support, making it a safe choice for critical systems.

Compared to Windows or macOS, it might feel a bit more challenging at first, but once mastered, it becomes a powerful tool!

Step 1: Installing Docker Using a Repository

First, you need to configure the repository (a location from which you can download software) to install Docker.

1. Install Required Packages

To configure the repository, you need to install a package called yum-utils. This package includes a useful tool called yum-config-manager, which helps in setting up repositories.

sudo yum install -y yum-utils

By using the sudo command, you can install the package with administrator privileges, which is necessary when making changes to the system.

2. Add the Docker Repository

Next, you will add Docker’s official repository to enable easy downloading and installation of Docker.

sudo yum-config-manager --add-repo https://download.docker.com/linux/centos/docker-ce.repo

Step 2: Installing Docker

Once the repository is configured, the next step is to install Docker.
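Before installing, it can be worth confirming that the repository was actually registered. The following is a minimal sketch, assuming yum-config-manager saved the file as /etc/yum.repos.d/docker-ce.repo and that the file defines a docker-ce-stable section; docker_repo_configured is a hypothetical helper name, not part of Docker or yum.

```shell
# Hypothetical helper: succeeds if the given .repo file defines docker-ce-stable.
# The default path is an assumption about where yum-config-manager saved the file.
docker_repo_configured() {
    repo_file="${1:-/etc/yum.repos.d/docker-ce.repo}"
    [ -f "$repo_file" ] && grep -q '^\[docker-ce-stable]' "$repo_file"
}

if docker_repo_configured; then
    echo "Docker repository is configured"
else
    echo "Docker repository not found; repeat the --add-repo step"
fi
```

If the check fails, rerunning the yum-config-manager command from the previous step is usually the simplest fix.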

1. Install Docker

You can install Docker along with additional tools (such as containerd and Docker Compose) using the following command:

sudo yum install docker-ce docker-ce-cli containerd.io docker-buildx-plugin docker-compose-plugin

Explanation of the Command:

- docker-ce: The main Docker package

- docker-ce-cli: A tool for controlling Docker from the command line

- containerd.io: A tool required by Docker to manage containers

- docker-buildx-plugin and docker-compose-plugin: Plugins for building images (Buildx) and for defining and running multi-container applications (Compose)

During installation, you may be prompted to verify a GPG key. This is used to ensure the authenticity of the software. If the fingerprint (a code made up of letters and numbers) matches 060A 61C5 1B55 8A7F 742B 77AA C52F EB6B 621E 9F35, select “OK” to proceed.
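Comparing a 40-character fingerprint by eye is error-prone, so a small script can do it instead. This is only a sketch: normalize_fpr and fingerprint_matches are hypothetical helper names, and the sample value in shown stands in for whatever your installer actually displays.

```shell
# Fingerprint quoted in this article for Docker's CentOS repository key.
EXPECTED_FPR="060A 61C5 1B55 8A7F 742B 77AA C52F EB6B 621E 9F35"

# Strip whitespace and upper-case the hex digits so formatting does not matter.
normalize_fpr() {
    printf '%s' "$1" | tr -d '[:space:]' | tr 'a-f' 'A-F'
}

# Hypothetical helper: succeeds only if both fingerprints are identical.
fingerprint_matches() {
    [ "$(normalize_fpr "$1")" = "$(normalize_fpr "$2")" ]
}

# Sample input: replace with the fingerprint shown by the installer.
shown="060a61c51b558a7f742b77aac52feb6b621e9f35"
if fingerprint_matches "$shown" "$EXPECTED_FPR"; then
    echo "Fingerprint matches"
else
    echo "Fingerprint does NOT match; do not accept the key"
fi
```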

2. Start Docker

Simply installing Docker isn’t enough—you need to start it:

sudo systemctl start docker

Running this command will start Docker, allowing you to create and manage containers.

Additional Explanation: Setting Up Groups

When you install Docker, a group called docker is automatically created. However, after the initial installation, users are not added to this group by default. This means you will need to prepend sudo to every Docker command. If you find this inconvenient, you can add your user to the docker group, but that’s a topic for later.

- First, install yum-utils and configure the repository.

- Next, install Docker and additional tools.

- Finally, start Docker to make it ready for use.
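The three steps above can be collected into one script. The sketch below uses only the commands already shown in this article; the run wrapper and the DRY_RUN variable are illustrative assumptions added so the script prints the commands by default instead of changing the system.

```shell
# Print each command when DRY_RUN=1 (the default here); execute it otherwise.
run() {
    if [ "${DRY_RUN:-1}" = "1" ]; then
        echo "+ $*"
    else
        "$@"
    fi
}

# Repository setup, installation, and service start, stopping at the first failure.
install_docker() {
    run sudo yum install -y yum-utils &&
    run sudo yum-config-manager --add-repo https://download.docker.com/linux/centos/docker-ce.repo &&
    run sudo yum install docker-ce docker-ce-cli containerd.io docker-buildx-plugin docker-compose-plugin &&
    run sudo systemctl start docker
}

install_docker
```

Once the printed commands look right, setting DRY_RUN=0 before running the script would execute them for real.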

Why Do I Need to Use sudo?

By default, when using Docker, you need to run commands with sudo. This is because Docker operates through a Unix socket, which is managed by the root user (administrator). In other words, regular users cannot access this socket unless they use sudo to gain elevated privileges.

However, having to type sudo every time can be cumbersome, right? In the next section, we will explain how to use Docker without sudo.

Using Docker Without sudo

1. Create the Docker Group and Add Yourself to It

To use Docker without sudo, you need to create a group for Docker and add yourself to it. This will allow your user to access the socket Docker uses, enabling you to run Docker commands without needing to prepend sudo.

1.1 Create the Docker Group

First, create a group named docker. Run the following command:

sudo groupadd docker

This command creates a new group called docker. If the group already exists, you might see the following message:

groupadd: group ‘docker’ already exists

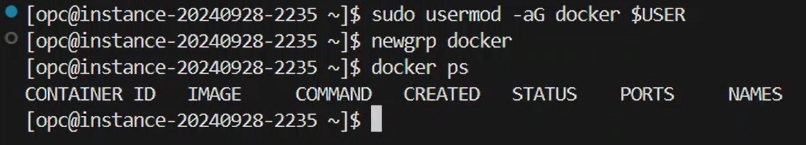

1.2 Add Yourself to the Docker Group

Next, add your user to the docker group by running the following command:

sudo usermod -aG docker $USER

This command adds the currently logged-in user to the docker group. The shell expands $USER to your username automatically.

1.3 Log Out and Log Back In

After adding your user to the group, you need to log out and log back in for the new group settings to take effect.

1.4 Apply Group Changes Immediately (Optional)

If you want to apply the changes without logging out, you can switch to the docker group using the following command:

newgrp docker

This starts a new shell in which the docker group is active, so the change takes effect immediately for that session.
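To see whether the group change has reached your current shell, you can compare the groups recorded for your account with the groups the shell actually holds. This is a rough sketch; group_in_list and docker_group_status are hypothetical helper names.

```shell
# Succeeds if group name $1 appears in the space-separated list $2.
group_in_list() {
    for g in $2; do
        [ "$g" = "$1" ] && return 0
    done
    return 1
}

# $1 = groups active in this shell, $2 = groups recorded for the account.
docker_group_status() {
    if group_in_list docker "$1"; then
        echo "docker group is active in this shell"
    elif group_in_list docker "$2"; then
        echo "docker group is configured but not active; log out and back in, or run: newgrp docker"
    else
        echo "not in the docker group; run: sudo usermod -aG docker \$USER"
    fi
}

# "id -nG" reports the current shell; "id -nG <user>" reads the group database.
docker_group_status "$(id -nG)" "$(id -nG "${USER:-$(id -un)}")"
```

The second message is the typical state right after usermod: the account is already in the group, but the running session has not picked it up yet.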

Security Considerations

When you add a user to the docker group, that user gains root-level privileges. This allows for powerful operations, so you should be aware of the associated security risks before proceeding.

What to Do If Docker Doesn’t Work Without sudo

If you still encounter errors when running Docker commands without sudo, it’s possible that file permissions were changed when Docker was previously run with sudo. If you see an error like this:

WARNING: Error loading config file: /home/user/.docker/config.json –

stat /home/user/.docker/config.json: permission denied

This means the permissions for Docker’s configuration files are incorrect. You can fix this by running the following commands:

sudo chown "$USER":"$USER" /home/"$USER"/.docker -R

sudo chmod g+rwx "$HOME/.docker" -R

These commands correct the permissions for the .docker directory and its files.
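To check whether the ownership fix is needed, or whether it worked, you can list anything under the .docker directory that does not belong to you. This is a minimal sketch; list_foreign_files is a hypothetical helper name, and if the directory is unreadable you may need to run the find command with sudo.

```shell
# Hypothetical helper: list entries under directory $1 not owned by user $2.
# Empty output means ownership is already consistent.
list_foreign_files() {
    find "$1" ! -user "$2" -print
}

docker_dir="$HOME/.docker"
if [ -d "$docker_dir" ]; then
    list_foreign_files "$docker_dir" "$(id -u)"
else
    echo "$docker_dir does not exist yet; nothing to fix"
fi
```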

Starting Docker Manually

After installing Docker, the service may not start automatically by default. You can manually start Docker by following these steps.

Checking the Status of Docker

First, use the systemctl command to check if Docker is running:

sudo systemctl status docker

If the result shows “inactive (dead),” it means that the Docker service is not running yet.

Starting Docker

In that case, you can manually start the Docker service with the following command:

sudo systemctl start docker

This will start Docker, making it ready to run containers.
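The check and the start can be combined into one function. The sketch below relies on systemctl is-active, which reports whether a unit is running through its exit status; start_docker_if_needed is a hypothetical name chosen for this example.

```shell
# Start the Docker service only when systemd reports that it is not active.
start_docker_if_needed() {
    if systemctl is-active --quiet docker; then
        echo "docker is already running"
    else
        sudo systemctl start docker && echo "docker started"
    fi
}
```

Calling start_docker_if_needed after defining it performs the check and starts the service only when required.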

Setting Docker to Start Automatically

Finally, here’s how to configure Docker to start automatically every time your computer boots up. This is done using a system called systemd. If you don’t enable this, you may encounter the following error after a reboot:

Cannot connect to the Docker daemon at unix:///var/run/docker.sock. Is the docker daemon running?

Run the following commands to enable the setting:

sudo systemctl enable docker.service

sudo systemctl enable containerd.service

With these commands, Docker will automatically start upon reboot. If you want to disable this setting later, you can use the following commands:

sudo systemctl disable docker.service

sudo systemctl disable containerd.service

Key Points Recap:

- To use Docker without sudo, create the docker group and add yourself to it.

- Changes to group memberships can be applied by either logging out and back in or using the newgrp command.

- If you encounter file permission issues, you can fix them with specific commands.

- To set Docker to start automatically, use the systemctl enable commands.

Why Doesn’t Docker Start Automatically on AlmaLinux?

The reason Docker doesn’t start automatically after installation on AlmaLinux is that Docker and its related services are not set to start automatically (enabled) by default in the system.

On Ubuntu, many packages are automatically configured to start services upon installation and remain enabled after reboot. However, on AlmaLinux and other Red Hat-based distributions (such as CentOS or Rocky Linux), you may need to manually enable services after installation.

Role of sudo systemctl enable:

The commands sudo systemctl enable docker.service and sudo systemctl enable containerd.service ensure that the Docker and containerd services start automatically at system boot.

Without this configuration, you’ll need to manually start the services after every reboot.
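Enabling and starting can also be done in one step with the --now option of systemctl enable. The following sketch wraps that in a function and then prints the enablement state of both units; enable_docker_at_boot is a hypothetical name used only for this example.

```shell
# Enable both units for boot and start them immediately,
# then print whether each unit is enabled.
enable_docker_at_boot() {
    sudo systemctl enable --now docker.service containerd.service &&
        systemctl is-enabled docker.service containerd.service
}
```

After calling enable_docker_at_boot, each unit should be reported as enabled, which is the state you want to see again after the next reboot.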

Differences in Distributions

AlmaLinux (Red Hat-based) differs from Ubuntu in how it handles services during package installation. In AlmaLinux, services are not always automatically enabled upon installation, which means you’ll need to manually use the enable command to activate them.

Differences in Service Management

Due to differences in package design and installation procedures, AlmaLinux often requires users to explicitly enable service auto-start. Once this is done, Docker and containerd should start automatically after a system reboot, eliminating the need to start services manually each time.

Impact of Using AlmaLinux as a Host

AlmaLinux is a Red Hat-based Linux distribution, meaning its package management and system structure differ from Debian-based systems. However, a container bundles its own userland (libraries, tools, and package manager) and shares only the host’s Linux kernel, so it does not depend on the host distribution. This means that running Debian-based containers on an AlmaLinux host should not pose a problem.

That said, there are a few key points to consider:

1. Differences in Package Management

Inside the container, package management is done using apt or dpkg, while on the host side, you’ll use dnf or yum. This means you will need to use different commands on the host and inside the container, which may require some adjustment.
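One way to avoid mixing up the two sets of commands is to let a script read the os-release file of the environment it runs in. The sketch below is illustrative only: pkg_manager_for is a hypothetical helper, and the list of distribution IDs is a small sample rather than a complete mapping.

```shell
# Hypothetical helper: print the package manager matching an os-release file.
# Defaults to the file of the system the script runs on.
pkg_manager_for() {
    (
        # os-release files consist of shell-compatible KEY=value lines.
        . "${1:-/etc/os-release}"
        case "${ID:-}" in
            almalinux|rocky|centos|rhel|fedora) echo "dnf" ;;
            debian|ubuntu)                      echo "apt-get" ;;
            *)                                  echo "unknown" ;;
        esac
    )
}

echo "This system uses: $(pkg_manager_for)"
```

Run on the AlmaLinux host this should report dnf, while the same script inside a Debian-based container should report apt-get.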

2. File System and Permission Management

There can be differences in file ownership and permission settings between the host and the container. For instance, AlmaLinux hosts may lack the www-data user found in Debian-based containers, which could lead to permission mismatches. In such cases, you may need to adjust settings manually.
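File ownership is stored as a numeric UID, so a mismatch shows up when the host and the container map that number to different names, or to none. The following sketch looks up a user in a passwd-format file; uid_in_passwd is a hypothetical helper name.

```shell
# Hypothetical helper: print the numeric UID of user $1 from passwd-format file $2.
uid_in_passwd() {
    awk -F: -v name="$1" '$1 == name { print $3 }' "$2"
}

host_uid="$(uid_in_passwd www-data /etc/passwd)"
if [ -n "$host_uid" ]; then
    echo "host has www-data with UID $host_uid"
else
    echo "host has no www-data user; its files appear on the host as a bare UID"
fi
```

The same function can be pointed at a copy of the container’s /etc/passwd to find the UID used inside the image. On Debian-based images www-data is typically UID 33, which is the number you would pass to chown on the host.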

3. Troubleshooting

Occasionally, issues may arise due to differences in dependencies or library versions between AlmaLinux and Debian-based containers. If this happens, you’ll need to examine the container’s logs or error messages and find the appropriate solution.

In conclusion, while running Debian-based containers on an AlmaLinux host generally poses no major issues, some manual adjustments may be required for file permissions or package management. If you want to minimize potential issues, you could unify your environment by using Ubuntu as the host, but with proper Docker usage, AlmaLinux should work just fine.

Containers Running as Root

When containers run as root, the fact that the host OS is AlmaLinux shouldn’t cause any major issues. This is because operations inside the container are isolated from the host, and the software and package management within the container do not directly depend on the host OS. Below, we’ll explain more about running containers as root.

Key Points When Running Containers as Root

Container Independence

Docker containers operate in environments isolated from the host. Therefore, operations run as root inside the container normally affect only the container’s own file system and processes. Whether the host OS is AlmaLinux or another Linux distribution doesn’t matter much, and operations within a Debian-based container should work without issue.

Mitigating File Permission Differences

If the container is running as the root user, file ownership or permission issues between the host and container are rarely a problem. The root user typically has access to all files and directories, so permission-related issues are minimized.

Security Considerations

Running containers as root comes with security risks. If a root-level container is compromised, it could have significant impacts on the host OS. For enhanced security, it’s recommended to consider using rootless mode or other methods to limit user permissions.

Host Impact

Even if there are issues with permission management or file ownership on the host side, containers running as root are less likely to be affected. However, when sharing files between the host and the container (e.g., through volume mounts), you may need to adjust permissions manually to account for differences between root-based operations.
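As an alternative to fixing ownership after the fact, a container can be started with the --user option so that files it writes to a mounted directory belong to the host user. The sketch below only prints such a command; docker_cmd_as_host_user is a hypothetical helper, and the /work mount point is an arbitrary choice for illustration.

```shell
# Hypothetical helper: print a docker command that runs image $1 as the calling
# host user, with the current directory mounted at /work inside the container.
docker_cmd_as_host_user() {
    printf 'docker run --rm --user %s:%s -v %s:/work -w /work %s\n' \
        "$(id -u)" "$(id -g)" "$PWD" "$1"
}

docker_cmd_as_host_user debian
```

Printing the command first makes it easy to review the UID, GID, and mount path before running it. Whether a given image works as a non-root user depends on the image, so this will not suit every container.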

Common Root-Based Containers

While most Docker containers run as the root user by default, certain types of containers are especially common for root-based operations. Here are a few examples:

1. Web Server Containers (e.g., Apache, Nginx)

Example images: httpd, nginx

Many web server containers start as root, though the web server itself may switch to a non-root user like www-data after startup. Nevertheless, running as root initially is typical for web server containers.

2. Database Server Containers (e.g., MySQL, PostgreSQL)

Example images: mysql, postgres

Database server containers are typically configured as root during initial setup, although the application may later shift to a dedicated user. Unless you change the configuration, root-level operations remain possible.

3. Containers Requiring Package Installation or Custom Setup

Example images: ubuntu, debian, almalinux

General-purpose OS images, like those based on Ubuntu or Debian, are often operated as root. Root access is required when installing packages or configuring the system, so root-based operations are typical.

4. CI/CD Pipeline Containers

Example images: jenkins, gitlab-runner

Containers used for CI/CD tools (e.g., Jenkins or GitLab Runner) often require elevated privileges for tasks like building or deploying, so they frequently operate as root.

5. Development and Testing Containers

Example images: node, python, ruby

Development containers (such as those for Node.js or Python) are often run as root because full access to the system is sometimes needed during development. Developers can install packages or modify system settings using root privileges.

6. System Management or Monitoring Tools Containers

Example images: prometheus, grafana, zabbix

Monitoring tools or system management containers may also run as root because they need access to system resources or configuration settings.

Pros and Cons of Running Containers as Root

Pros

- Full access to all system resources, allowing for greater customization and configuration.

- Easier management, as root privileges reduce issues related to file permissions.

Cons

- Higher security risks. Running containers as root, especially when exposed to the internet, can be very dangerous.

- Mistakes or vulnerabilities could potentially affect the host OS.

Alternatives: Rootless Mode

If you are concerned about security, you can run Docker containers in rootless mode, which allows you to operate Docker without root privileges. This is especially useful in production environments where security is a top priority.

Many containers run as root by default, and web servers, database servers, CI/CD tools, and similar systems commonly operate in root-based environments. Running containers as root offers convenience, but it’s important to consider the security risks, and in some cases rootless mode may be preferable.

When I tried it myself, Drupal worked even with mounts under the root user, but WordPress didn’t work as well. Drupal tends to be more flexible, especially when it comes to file systems and permissions, whereas WordPress might be a bit stricter in this regard.

Differences in Permission Settings Between Drupal and WordPress

Drupal: Drupal can sometimes operate with directories that have root-level permissions during installation or operation. This is especially true when managing modules or themes, where Drupal is flexible enough to avoid issues, even when running under root privileges.

WordPress: On the other hand, WordPress expects the web server (typically Apache or Nginx) to run under users like www-data or apache. If file ownership remains with root, permission errors may occur. Therefore, if file ownership is not set correctly, it can cause problems with WordPress’s functionality.

What You Can Learn with AlmaLinux

Basic System Administration

By manually starting and enabling services, you can gain a deeper understanding of what happens within a Linux system. For example, you can learn how to manage services using the systemctl command and how to check service dependencies, gaining foundational knowledge of server operations.

Security and Permission Management

Red Hat-based distributions place a strong emphasis on security. By learning about SELinux (Security-Enhanced Linux), file ownership, and permission settings, you can improve your server’s security management practices.

Package Management

Using AlmaLinux’s dnf or yum package managers, you can learn how to manage dependencies and updates to maintain system stability. This is especially important in enterprise environments, where proper package management is critical.

Gaining Experience in Enterprise Environments

Since AlmaLinux is compatible with Red Hat-based distributions widely used in enterprise environments, it offers a learning environment similar to those systems actually being run in businesses. This helps you acquire practical skills that are directly applicable to real-world situations.

AlmaLinux is well-suited for learning more in-depth concepts like system administration, security, and package management. It provides an excellent environment for gaining a solid understanding of the fundamentals of Linux.

In contrast, distributions like Ubuntu are beginner-friendly and easier to handle, making them convenient for initial familiarity. However, gaining experience with a more hands-on distribution like AlmaLinux can help you develop more advanced skills.

To deepen your understanding, a slightly more involved distribution like AlmaLinux might be a good choice.