VALL-E X Installation Guide for Multilingual Text-to-Speech and Speech Cloning

Install VALL-E X for multilingual text-to-speech synthesis and speech cloning. Before we begin, we will explain the differences between a Python virtual environment and Docker.

You cannot install CUDA directly within a Python virtual environment (venv); CUDA is a system-level component and must be installed on the host system.

The Python virtual environment is used to isolate Python packages and their dependencies, but it cannot be used to install or manage system-level software or drivers such as CUDA.

To use CUDA, the following steps must be taken:

Install CUDA on the host system:

Follow the CUDA Toolkit Installation Guide to install CUDA on the host system. After installation, you will be able to access the CUDA Toolkit and its dependencies by setting the environment variables appropriately.

Create a Python Virtual Environment:

Create a Python virtual environment on the host system where CUDA is installed.

Install CUDA-supporting packages in the virtual environment:

Install Python packages that utilize CUDA (e.g., TensorFlow or PyTorch) within the virtual environment.

Verify that CUDA is functioning properly:

Use a Python script or notebook to verify that CUDA is working properly. This step can be performed using the test scripts provided in the official documentation of the specific deep learning framework.

Once this process is complete, CUDA can be used from within the virtual environment.
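The split between the host and the virtual environment can be illustrated with a short check. This is a minimal sketch, not part of the VALL-E-X project: the helper names are made up for illustration, and it assumes the Windows CUDA installer has set the `CUDA_PATH` environment variable, which may not hold on every setup.

```python
import os
import sys


def in_virtualenv() -> bool:
    """Return True when the interpreter is running inside a venv."""
    # Inside a venv, sys.prefix points at the venv directory while
    # sys.base_prefix still points at the base Python installation.
    return sys.prefix != sys.base_prefix


def cuda_toolkit_path(environ=None):
    """Return the host CUDA Toolkit location, or None if it is not set.

    Assumption: the toolkit installer exported CUDA_PATH on the host.
    """
    env = os.environ if environ is None else environ
    return env.get("CUDA_PATH")


if __name__ == "__main__":
    print("Running inside a venv:", in_virtualenv())
    print("CUDA_PATH on the host:", cuda_toolkit_path())
```

The venv changes where Python packages are looked up, but `CUDA_PATH` still comes from the host environment, which is why CUDA itself has to be installed outside the venv.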

On Windows, Docker Desktop uses WSL 2 as its backend, and containers running on a Linux distribution have access to that distribution’s resources and functionality. Therefore, once CUDA is set up on the WSL 2 Linux distribution, applications in Docker containers can also take advantage of CUDA’s capabilities.

You can proceed with the following steps:

Install a Linux distribution on WSL 2:

First, install the appropriate Linux distribution on WSL 2.

Install CUDA on the Linux distribution:

Next, install CUDA on the Linux distribution.

Set up Docker in WSL 2 mode:

Install Docker Desktop for Windows and enable WSL 2 mode.

Create a Docker container:

Create a Docker container based on the appropriate Docker image for your project.

Deploy a CUDA-enabled application to the container:

Deploy a CUDA-enabled application to the container and run the application using CUDA functionality.

Note that in order to use CUDA, the Docker container must be able to access the GPU. This can be achieved using Docker’s --gpus option (available in Docker 19.03 and later).
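As a rough illustration of the `--gpus` option, the sketch below only assembles the command line and does not run Docker. The helper name is hypothetical, and the image tag `nvidia/cuda:11.8.0-base-ubuntu22.04` is an assumed example; check which tags are actually published before relying on it.

```python
import shlex


def docker_gpu_command(image, command, gpus="all"):
    """Build a `docker run` argument list that exposes GPUs to the container."""
    # --gpus all asks Docker to make every host GPU visible in the container.
    return ["docker", "run", "--rm", "--gpus", gpus, image, *command]


if __name__ == "__main__":
    # Assumed example image; nvidia-smi lists the GPUs the container can see.
    args = docker_gpu_command("nvidia/cuda:11.8.0-base-ubuntu22.04", ["nvidia-smi"])
    print(shlex.join(args))
```

Running the printed command is one way to confirm that the container can see the GPU before deploying an application into it.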

Python Virtual Environment (venv)

- Purpose: Isolate Python packages and their dependencies on a per-project basis.

- Isolation level: Python packages and their versions.

- Use Case: Manage different package versions in different Python projects.

- Setup: Relatively easy to set up.

Docker Container

- Purpose: Isolate an application and all of its dependencies (including the OS, system libraries, environment variables, etc.) to create a portable container.

- Isolation level: Encompasses the entire environment within the container, down to the OS level.

- Use Case: Complete control over the application’s environment, ensuring the same behavior on any system.

- Setup: Building and managing the environment can be somewhat complex.

Using CUDA in the Python Virtual Environment:

The Python virtual environment can utilize host system resources (e.g., CUDA drivers), but it cannot include these system-level dependencies within itself.

Using CUDA with Docker:

Docker containers can utilize CUDA environments installed on the host system or on a WSL 2 Linux distribution. A container image can also bundle a specific CUDA toolkit version, although the NVIDIA GPU driver itself must still be installed on the host.

These are the differences between Python virtual environments and Docker. Proceed with the installation of VALL-E-X, using its GitHub repository as a reference: https://github.com/Plachtaa/VALL-E-X

This time we will set the system up in a Python virtual environment. The CUDA Toolkit is not yet installed on Windows 11, so we first need to meet the requirements for VALL-E-X as described on GitHub:

Install with pip, recommended with Python 3.10, CUDA 11.7 ~ 12.0, PyTorch 2.0.

I downloaded CUDA Toolkit 11.8 from NVIDIA’s CUDA Toolkit download page and installed it on Windows 11. I chose this version because it falls within the recommended range and I see 11.8 referenced frequently.
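The recommendation quoted above (Python 3.10, CUDA 11.7 ~ 12.0) can be written as a small check. This is only a sketch under the assumption that comparing major and minor version numbers is enough; the function names are illustrative and do not come from the VALL-E-X repository.

```python
import sys


def parse_version(text):
    """Turn a version string such as '11.8' or '11.8.0' into (major, minor)."""
    return tuple(int(part) for part in text.split(".")[:2])


def cuda_in_recommended_range(cuda_version, low="11.7", high="12.0"):
    """Return True if cuda_version lies within the quoted range, inclusive."""
    return parse_version(low) <= parse_version(cuda_version) <= parse_version(high)


def python_is_recommended(version_info=None):
    """Return True if the interpreter is Python 3.10."""
    info = sys.version_info if version_info is None else version_info
    return tuple(info[:2]) == (3, 10)


if __name__ == "__main__":
    print("CUDA 11.8 in range:", cuda_in_recommended_range("11.8"))
    print("Python 3.10 in use:", python_is_recommended())
```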

Next, clone the repository into a folder of your choice.

git clone https://github.com/Plachtaa/VALL-E-X.git

If you receive an error like:

'git' is not recognized as an internal or external command,

operable program or batch file.

it means that Git for Windows is not installed. Download and install it from the official Git for Windows download page.
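If Python is already installed, one optional way to confirm whether git can be found on the PATH is the standard library's `shutil.which`. The helper name below is illustrative only.

```python
import shutil


def find_executable(name="git"):
    """Return the full path of an executable on PATH, or None if it is missing."""
    return shutil.which(name)


if __name__ == "__main__":
    path = find_executable("git")
    if path is None:
        print("git was not found on PATH; install Git for Windows first.")
    else:
        print("git found at:", path)
```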

Launch Git CMD and type the following commands:

cd youtube

git clone https://github.com/Plachtaa/VALL-E-X.git

cd VALL-E-XCreate a Python virtual environment and navigate to the appropriate directory:

python -m venv vall

cd vall

cd ScriptsActivate the virtual environment:

activateGo back two levels up in the virtual environment:

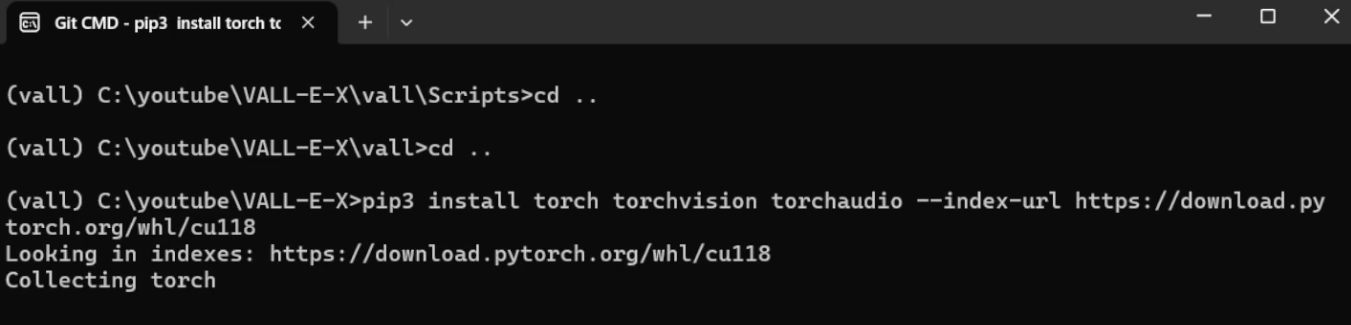

(vall) C:\youtube\VALL-E-X\vall\Scripts>cd ..

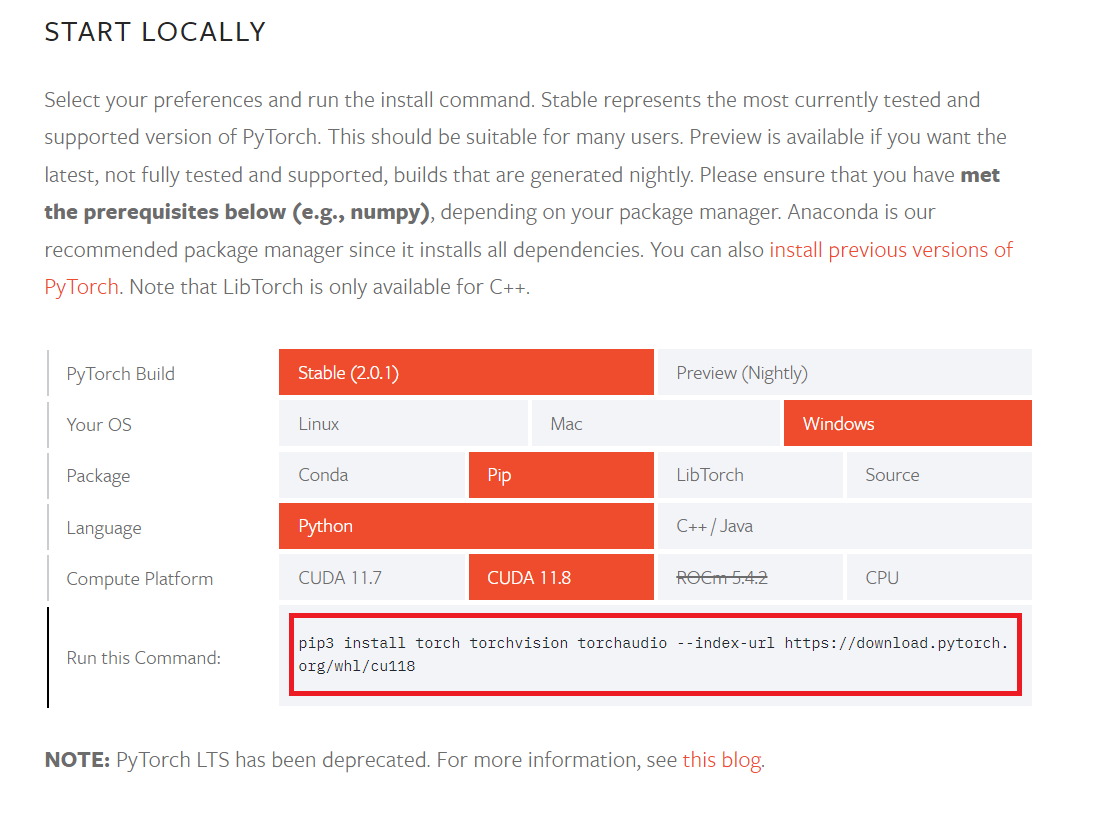

(vall) C:\youtube\VALL-E-X\vall>cd ..Install PyTorch, but this is important: I have installed CUDA Toolkit 11.8 on Windows, so the virtual environment should match it.

The following two commands serve different purposes. Understanding the purpose of each command will help you understand why both are necessary.

Install PyTorch and related packages:

(vall) C:\youtube\VALL-E-X>pip3 install torch torchvision torchaudio --index-url https://download.pytorch.org/whl/cu118

This command installs PyTorch and related packages from the PyTorch wheel index for CUDA 11.8 (cu118). The index URL, rather than a version number, is what selects the build that targets CUDA 11.8.
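The relationship between the CUDA version and the index URL can be sketched as follows. The helper names are made up for illustration, and the sketch assumes the `cuXYZ` naming seen in the command above; PyTorch does not publish an index for every CUDA version, so treat the result as a candidate URL rather than a guarantee.

```python
def pytorch_index_url(cuda_version):
    """Map a CUDA version such as '11.8' to the matching wheel index URL."""
    major, minor = cuda_version.split(".")[:2]
    return f"https://download.pytorch.org/whl/cu{major}{minor}"


def pip_install_command(cuda_version):
    """Build the pip argument list used to install the CUDA-specific build."""
    return [
        "pip3", "install", "torch", "torchvision", "torchaudio",
        "--index-url", pytorch_index_url(cuda_version),
    ]


if __name__ == "__main__":
    print(" ".join(pip_install_command("11.8")))
```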

Install project dependencies:

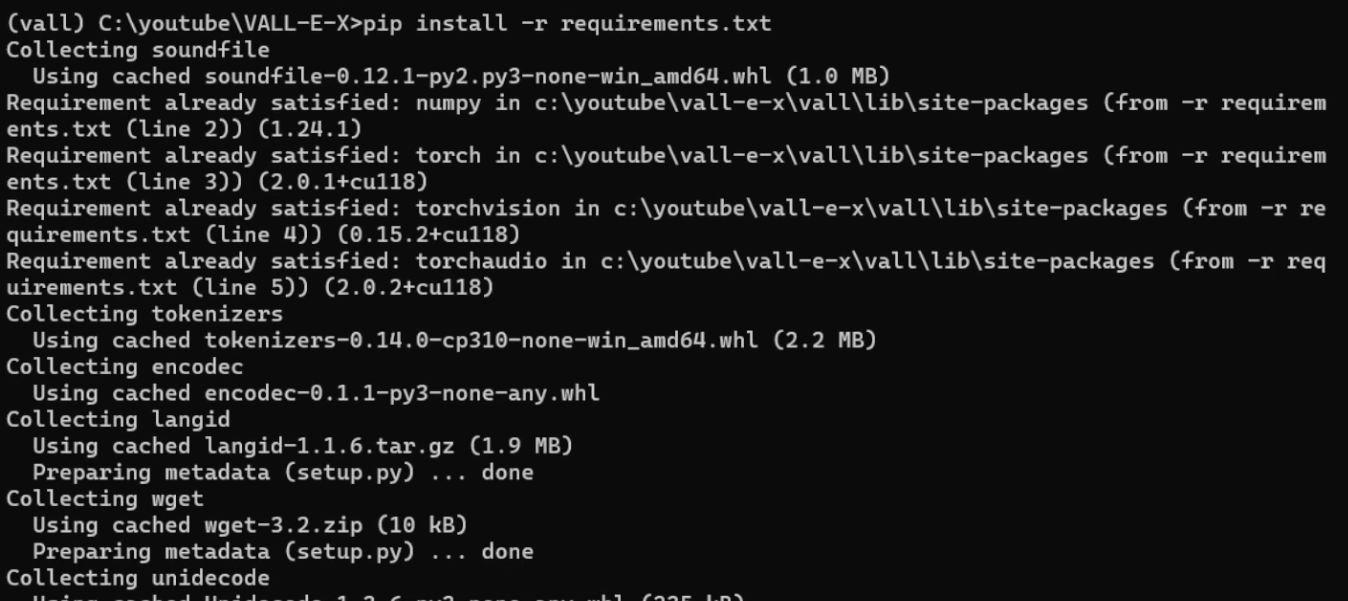

(vall) C:\youtube\VALL-E-X>pip install -r requirements.txt

This command installs all the Python packages and their specific versions required for the project to work. This may include torch, torchvision, and torchaudio, among many other packages.

Therefore, after installing the PyTorch-related packages with the first command, the second command will install the remaining project dependencies.

However, if requirements.txt pinned CUDA 11.8 builds of torch, torchvision, and torchaudio, the first command might be unnecessary, because the second command alone would install the correct builds.

In this case, requirements.txt does not appear to pin a specific PyTorch build, so the CUDA 11.8 build had to be installed first. The remaining dependencies are then installed from requirements.txt.
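One way to check whether a requirements file pins the PyTorch packages is to scan it for `==` specifiers. The sketch below is deliberately simple and is not a full requirements parser; the function name and the sample text are hypothetical and are not the actual contents of the project's requirements.txt.

```python
import re

PYTORCH_PACKAGES = ("torch", "torchvision", "torchaudio")


def pinned_status(requirements_text, packages=PYTORCH_PACKAGES):
    """Return {package: True/False} for listed packages found in the text.

    True means the line uses an exact '==' pin; False means it does not.
    """
    status = {}
    for raw_line in requirements_text.splitlines():
        line = raw_line.split("#", 1)[0].strip()  # drop comments
        if not line or line.startswith("-"):      # skip blanks and pip options
            continue
        match = re.match(r"^([A-Za-z0-9_.\-]+)\s*(.*)$", line)
        if not match:
            continue
        name, spec = match.group(1).lower(), match.group(2).strip()
        if name in packages:
            status[name] = spec.startswith("==")
    return status


if __name__ == "__main__":
    # Hypothetical sample, for illustration only.
    sample = "torch\ntorchaudio==2.0.2\nnumpy\n"
    print(pinned_status(sample))
```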

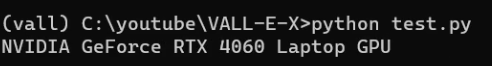

To check whether the GPU is available, I created the following script and saved it as test.py:

    import torch

    # Check if CUDA is available
    if torch.cuda.is_available():
        # Print the name of the first GPU
        print(torch.cuda.get_device_name(0))
    else:
        print("CUDA is not available.")

Then run:

python test.py
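If test.py reports that CUDA is not available, a slightly more detailed report can help narrow down the cause, for example a CPU-only PyTorch build. This is a minimal sketch; the function name is illustrative, and it returns early if PyTorch is not installed at all.

```python
def cuda_report():
    """Collect basic facts about the installed PyTorch build and visible GPUs."""
    try:
        import torch
    except ImportError:
        return {"torch_installed": False}

    report = {
        "torch_installed": True,
        "torch_version": torch.__version__,
        # torch.version.cuda is None for CPU-only builds.
        "built_for_cuda": torch.version.cuda,
        "cuda_available": torch.cuda.is_available(),
        "devices": [],
    }
    if report["cuda_available"]:
        report["devices"] = [
            torch.cuda.get_device_name(i)
            for i in range(torch.cuda.device_count())
        ]
    return report


if __name__ == "__main__":
    for key, value in cuda_report().items():
        print(f"{key}: {value}")
```

A `built_for_cuda` value of `None` suggests the CPU-only build was installed, in which case reinstalling from the cu118 index is worth trying.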

If the script prints the name of your GPU, the software in the virtual environment can correctly use the host system’s GPU. Now run the main script:

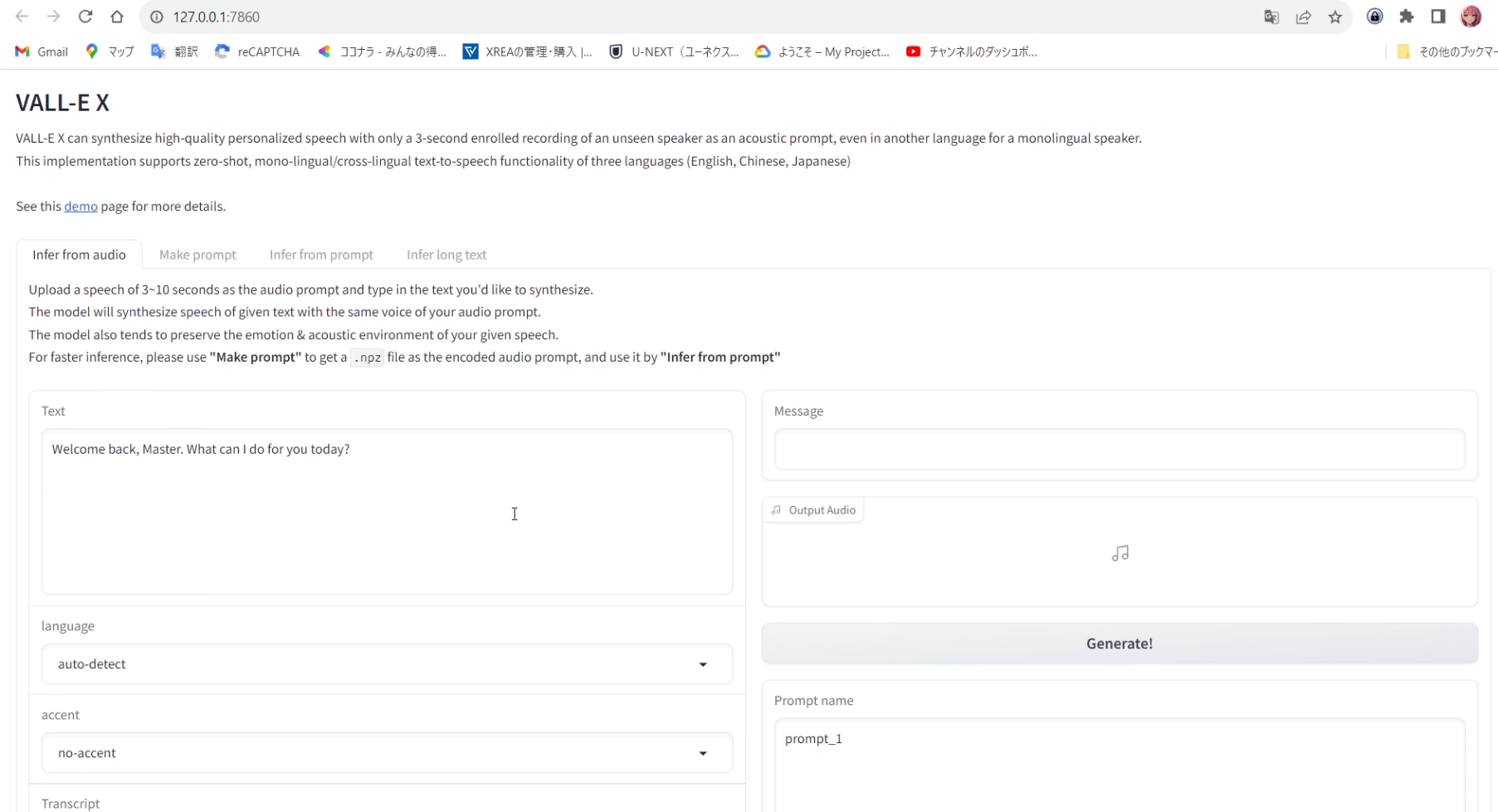

python launch-ui.py

After a few moments, the browser will launch and display VALL-E X.