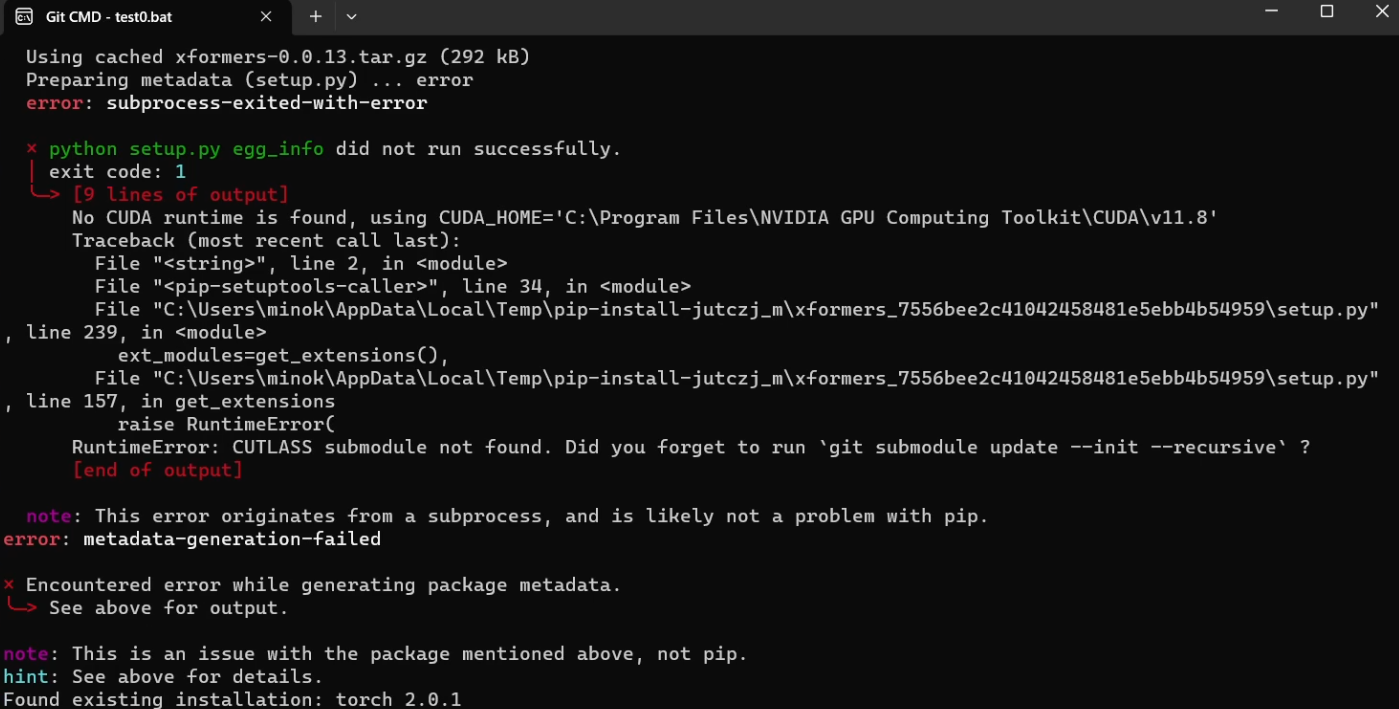

The other day, AudioCraft (MusicGen), which I had been able to use until now, stopped running with the following error.

Preparing metadata (setup.py) … error

error: subprocess-exited-with-error

× python setup.py egg_info did not run successfully.

│ exit code: 1

╰─> [9 lines of output]

No CUDA runtime is found, using CUDA_HOME='C:\Program Files\NVIDIA GPU Computing Toolkit\CUDA\v11.8'

Traceback (most recent call last):

File "", line 2, in

File "", line 34, in

File "C:\Users\minok\AppData\Local\Temp\pip-install-jutczj_m\xformers_7556bee2c41042458481e5ebb4b54959\setup.py", line 239, in

ext_modules=get_extensions(),

File "C:\Users\minok\AppData\Local\Temp\pip-install-jutczj_m\xformers_7556bee2c41042458481e5ebb4b54959\setup.py", line 157, in get_extensions

raise RuntimeError(

RuntimeError: CUTLASS submodule not found. Did you forget to run git submodule update --init --recursive ?

[end of output]

note: This error originates from a subprocess, and is likely not a problem with pip.

error: metadata-generation-failed

× Encountered error while generating package metadata.

╰─> See above for output.

note: This is an issue with the package mentioned above, not pip.

hint: See above for details.

This happened inside a Python virtual environment.

According to the official site, the page has changed: the recommended Python version is now 3.9, not 3.10 as before.
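Before rebuilding anything, it can help to confirm which interpreter the virtual environment actually runs. The following is a minimal sketch; the `RECOMMENDED` constant and the `matches_recommended` helper are names made up for this example, and 3.9 is simply the version mentioned above.

```python
import sys

# Assumption: 3.9 is the recommended version mentioned in this post;
# adjust the tuple if the project changes its recommendation again.
RECOMMENDED = (3, 9)


def matches_recommended(version_info=sys.version_info, recommended=RECOMMENDED):
    """Return True when the major.minor version equals the recommended one."""
    return tuple(version_info[:2]) == tuple(recommended)


if __name__ == "__main__":
    current = "{}.{}".format(*sys.version_info[:2])
    if matches_recommended():
        print(f"Python {current} matches the recommended version.")
    else:
        print(
            f"Python {current} differs from the recommended "
            f"{RECOMMENDED[0]}.{RECOMMENDED[1]}; the install may fail."
        )
```

Running it with the interpreter of the broken environment shows at a glance whether the version is the likely culprit.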

The straightforward fix is to install the recommended version, but some of my other programs do not work with it. One option is to install multiple Python versions and switch between them, but I chose Docker instead. An image with the recommended Python version solves the problem, and it works even when the CUDA version in the container differs from the host's. Docker also has several general advantages:

- Environment reproducibility: Docker containers allow dependencies and application environments to be defined in code, making environments more reproducible. This makes it easy to share environments between different machines or team members and run code under the same conditions.

- Environment isolation: Because Docker containers are isolated from the host system, it is easier to maintain different environments for different projects or tasks. This avoids dependency conflicts and versioning issues.

- Portability: Docker containers can be easily moved between different operating systems and cloud platforms. This simplifies application deployment and scaling.

- Efficiency and Speed: Docker containers are lightweight and fast to launch. This increases the efficiency of deployment, testing, and continuous integration/continuous delivery (CI/CD) pipelines.

- Version Control: Docker images are version controlled, making it easy to track, roll back, and manage different versions of applications and environments.

- Efficient use of resources: Containers share the host kernel instead of each running a full guest OS, so they use the host machine's resources more economically than virtual machines.

- Simplified development and deployment: Docker makes it easy to move applications between development, test, and production environments. This simplifies the deployment process and reduces errors.

- Community and Ecosystem: Docker has an active community and extensive ecosystem with many ready-made Docker images, Docker Compose templates, and other resources available.

So, start WSL on Windows and install Docker.

Create an appropriate directory for easier management.

mkdir audio

Go to the empty directory you created and create a Dockerfile.

cd audio

sudo vi Dockerfile

The contents of the Dockerfile are as follows. I ran into a lot of trouble, but it worked with these contents.

# Specify the base image

FROM python:3.9-slim-buster

# Install necessary packages

RUN apt-get update && \

apt-get install -y git ffmpeg && \

pip install torch==2.0.0+cu118 torchvision==0.15.1+cu118 torchaudio==2.0.1 --index-url https://download.pytorch.org/whl/cu118

# Clone the AudioCraft repository

RUN git clone https://github.com/facebookresearch/audiocraft /usr/src/app

# Set the working directory

WORKDIR /usr/src/app

# Install AudioCraft

RUN pip install -U .

# Start the server

CMD ["python", "./demos/musicgen_app.py", "--listen", "0.0.0.0", "--server_port", "7860"]

However, after I updated the host's CUDA version to 12.4, I needed to modify the file. I made the changes by referring to the requirements.txt on GitHub. Note that the container uses CUDA 12.1.

This file was created with the assistance of Claude 3.5 Sonnet.

FROM python:3.9-slim-buster

RUN apt-get update && \

apt-get install -y \

git \

ffmpeg \

build-essential \

gfortran \

libopenblas-dev \

liblapack-dev \

python3-distutils \

python3-dev \

python3-setuptools \

python3-pip \

libsndfile1 \

pkg-config \

python3-venv \

python3-distutils-extra && \

apt-get clean && \

rm -rf /var/lib/apt/lists/*

RUN python -m pip install --no-cache-dir --upgrade pip setuptools wheel

ENV VIRTUAL_ENV=/opt/venv

RUN python -m venv $VIRTUAL_ENV

ENV PATH="$VIRTUAL_ENV/bin:$PATH"

ENV SETUPTOOLS_USE_DISTUTILS=stdlib

RUN pip install --no-cache-dir "wheel>=0.38.4"

RUN pip install --no-cache-dir "setuptools>=66.0.0"

RUN pip install --no-cache-dir "cython>=0.29.33"

RUN pip install --no-cache-dir "pybind11>=2.10.3"

RUN pip install --no-cache-dir "numpy==1.23.5"

RUN pip install --no-cache-dir \

torch==2.1.0+cu121 \

torchaudio==2.1.0+cu121 \

torchvision==0.16.0+cu121 \

torchtext==0.16.0 \

--index-url https://download.pytorch.org/whl/cu121

RUN pip install --no-cache-dir \

"av==11.0.0" \

"einops" \

"flashy>=0.0.1" \

"hydra-core>=1.1" \

"hydra_colorlog" \

"julius"

RUN pip install --no-cache-dir \

"num2words" \

"sentencepiece" \

"spacy>=3.6.1" \

"huggingface_hub" \

"tqdm" \

"transformers>=4.31.0"

RUN pip install --no-cache-dir \

"xformers<0.0.23" \

"demucs" \

"librosa" \

"soundfile" \

"gradio"

RUN pip install --no-cache-dir \

"torchmetrics" \

"encodec" \

"protobuf" \

"pesq" \

"pystoi"

RUN git clone https://github.com/facebookresearch/audiocraft /usr/src/app

WORKDIR /usr/src/app

RUN pip install --no-dependencies -e .

CMD ["python", "./demos/musicgen_app.py", "--listen", "0.0.0.0", "--server_port", "7860"]

In the following variant, the container also uses CUDA 12.4. However, while building this Dockerfile, I noticed that many of the downloaded packages still had "12.1" in their versions.

FROM python:3.9-slim-buster

RUN apt-get update && \

apt-get install -y \

git \

ffmpeg \

build-essential \

gfortran \

libopenblas-dev \

liblapack-dev \

python3-distutils \

python3-dev \

python3-setuptools \

python3-pip \

libsndfile1 \

pkg-config \

python3-venv \

python3-distutils-extra && \

apt-get clean && \

rm -rf /var/lib/apt/lists/*

RUN python -m pip install --no-cache-dir --upgrade pip setuptools wheel

ENV VIRTUAL_ENV=/opt/venv

RUN python -m venv $VIRTUAL_ENV

ENV PATH="$VIRTUAL_ENV/bin:$PATH"

ENV SETUPTOOLS_USE_DISTUTILS=stdlib

RUN pip install --no-cache-dir "wheel>=0.38.4"

RUN pip install --no-cache-dir "setuptools>=66.0.0"

RUN pip install --no-cache-dir "cython>=0.29.33"

RUN pip install --no-cache-dir "pybind11>=2.10.3"

RUN pip install --no-cache-dir "numpy==1.23.5"

RUN pip install --no-cache-dir \

torch==2.4.0+cu124 \

torchvision==0.19.0+cu124 \

torchaudio==2.4.0+cu124 \

--index-url https://download.pytorch.org/whl/cu124

RUN pip install --no-cache-dir torchtext==0.17.0

RUN pip install --no-cache-dir "transformers==4.31.0"

RUN pip install --no-cache-dir \

"av==11.0.0" \

"einops" \

"flashy>=0.0.1" \

"hydra-core>=1.1" \

"hydra_colorlog" \

"julius"

RUN pip install --no-cache-dir \

"num2words" \

"sentencepiece" \

"spacy>=3.6.1" \

"huggingface_hub" \

"tqdm"

RUN pip install --no-cache-dir \

"xformers==0.0.22" \

"demucs" \

"librosa" \

"soundfile" \

"gradio"

RUN pip install --no-cache-dir \

"torchmetrics" \

"encodec" \

"protobuf" \

"pesq" \

"pystoi"

RUN git clone https://github.com/facebookresearch/audiocraft /usr/src/app

WORKDIR /usr/src/app

RUN pip install --no-dependencies -e .

CMD ["python", "./demos/musicgen_app.py", "--listen", "0.0.0.0", "--server_port", "7860"]

There are several likely reasons why some packages still download CUDA 12.1 builds while building the Dockerfile for CUDA 12.4:

- Version Compatibility Dependencies

  - Many deep learning libraries declare a specific PyTorch version as a direct dependency

  - For example, xformers and torchtext releases are each tied to a particular torch release, so installing them after torch can make pip swap in a different torch build, such as one that pulls in CUDA 12.1 libraries

- Binary Compatibility

  - CUDA 12.4 builds are relatively new, and not all libraries publish them yet

  - Many libraries provide CUDA 12.1 binaries as their stable version

  - PyTorch wheels bring their own CUDA runtime libraries as pip packages, so a CUDA 12.1 build runs as long as the host driver is new enough, which a driver supporting CUDA 12.4 is

- Stability Considerations

  - CUDA 12.1 is widely used and thoroughly tested

  - Many libraries conduct their official testing with CUDA 12.1
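To see whether a build ended up with mixed CUDA variants, one option is to compare the `+cuXXX` suffix of the installed torch packages. The sketch below is only an illustration: the helper names are invented for this post, and it assumes the wheels came from the PyTorch index, which appends that suffix (wheels from the default PyPI index carry no suffix and show up as `None`).

```python
import re
from importlib import metadata

# Packages whose wheels carry a "+cuXXX" tag when installed from the PyTorch index.
TORCH_FAMILY = ("torch", "torchvision", "torchaudio")


def cuda_tag(version):
    """Return the CUDA tag of a version such as '2.4.0+cu124', or None if absent."""
    match = re.search(r"\+(cu\d+)", version)
    return match.group(1) if match else None


def installed_cuda_tags(packages=TORCH_FAMILY):
    """Map each installed package to its CUDA tag; packages not installed are skipped."""
    tags = {}
    for name in packages:
        try:
            tags[name] = cuda_tag(metadata.version(name))
        except metadata.PackageNotFoundError:
            continue
    return tags


def is_consistent(tags):
    """True when every package reports the same tag (an empty mapping also counts)."""
    return len(set(tags.values())) <= 1


if __name__ == "__main__":
    tags = installed_cuda_tags()
    print(tags)
    print("consistent" if is_consistent(tags) else "mixed CUDA builds")
```

If torch reports `None` or `cu121` while torchvision and torchaudio report `cu124`, a later `pip install` step has most likely replaced the torch wheel.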

Possible solutions include:

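- Explicit Version Specification

# Explicitly specify the version and the index

# Note: --no-deps also skips torch's own dependencies, which must then be installed separately

RUN pip install --no-cache-dir \

torch==2.4.0+cu124 \

--index-url https://download.pytorch.org/whl/cu124 \

--no-deps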

- Dependency Organization

# Install dependencies individually to avoid conflicts

RUN pip install --no-cache-dir \

packageA==version \

--no-deps

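- Environment Variable Configuration

# Record the intended CUDA version through environment variables

# Note: these do not change which wheels pip downloads; TORCH_CUDA_ARCH_LIST only matters when a CUDA extension is compiled from source

ENV CUDA_VERSION=12.4

ENV TORCH_CUDA_ARCH_LIST="8.0;8.6;8.9"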

However, at present, it’s acceptable for some packages to use CUDA 12.1 binaries because:

- A driver that supports CUDA 12.4 also runs binaries built for CUDA 12.1

- Performance impact is minimal

- System stability is maintained

In the future, we expect more libraries to fully support CUDA 12.4, enabling the creation of pure CUDA 12.4 environments.

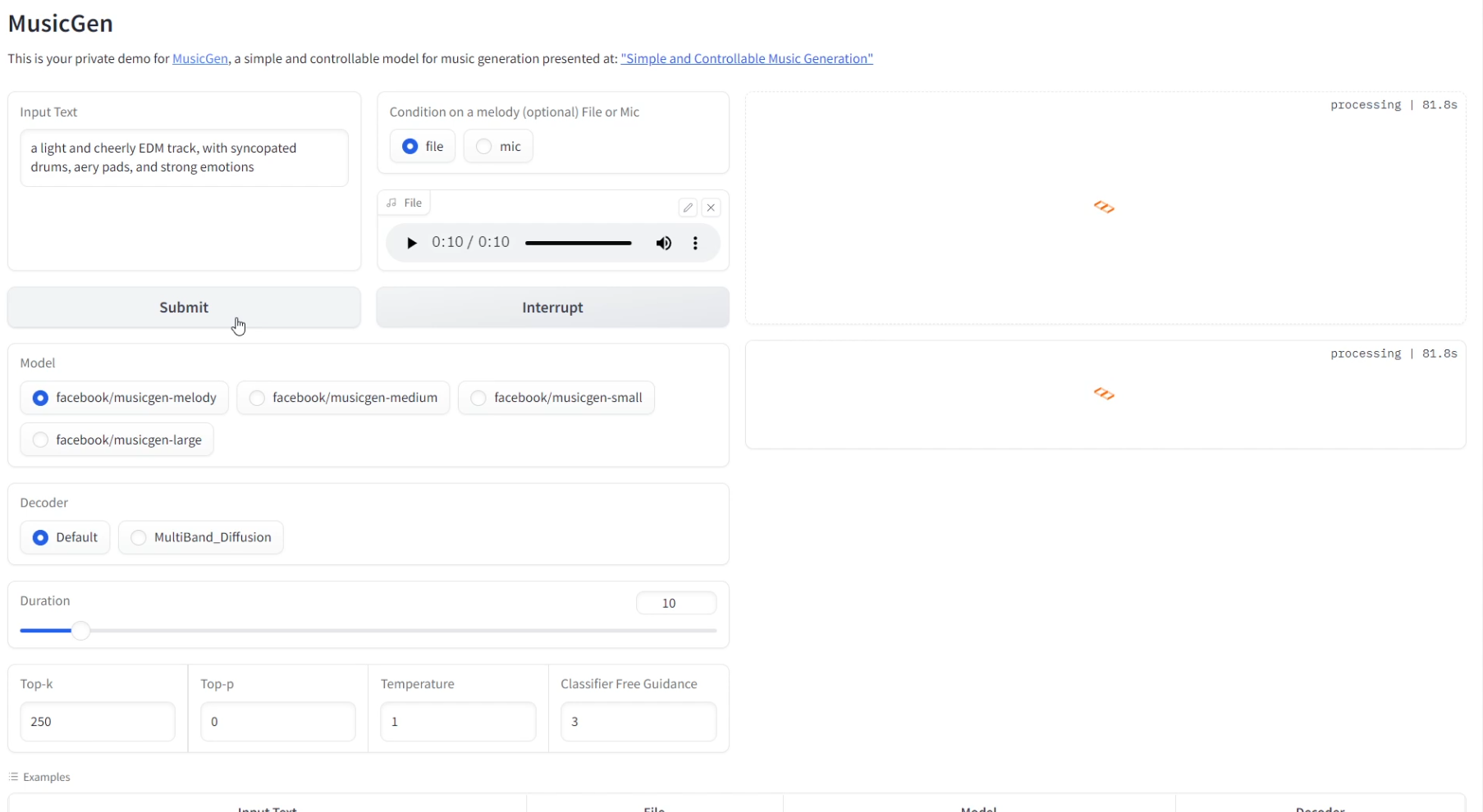

Build a Docker image based on the created Dockerfile.

docker build -t audiocraft .

Next, run the container.

docker run -d --name audio -p 7860:7860 audiocraft

Check the WSL IP address.

ip a

After confirming the IP address, access it with a browser.

http://<WSL IP address>:7860
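If the page does not load, it is useful to know whether the port is reachable at all before suspecting the app itself. Here is a small sketch using only the standard library; `is_port_open` is a helper name made up for this example, and the host value is a placeholder to be replaced with the address shown by `ip a`.

```python
import socket


def is_port_open(host, port, timeout=2.0):
    """Return True if a TCP connection to host:port succeeds within the timeout."""
    try:
        with socket.create_connection((host, port), timeout=timeout):
            return True
    except OSError:
        # Covers refused connections, timeouts, and unreachable hosts.
        return False


if __name__ == "__main__":
    host = "127.0.0.1"  # placeholder: replace with the WSL IP address
    port = 7860  # the port published by `docker run -p 7860:7860`
    state = "reachable" if is_port_open(host, port) else "not reachable"
    print(f"http://{host}:{port} is {state}")
```

A "not reachable" result right after `docker run` may just mean the server is still starting, so it is worth retrying after a short wait.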

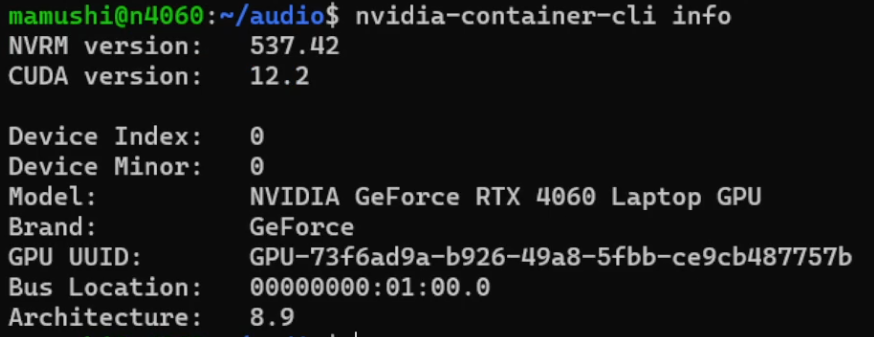

To use a GPU with Docker, install the NVIDIA Container Toolkit.

If it is installed correctly, the following command shows the GPU information.

nvidia-container-cli info

mamushi@n4060:~/audio$ nvidia-container-cli info

NVRM version: 537.42

CUDA version: 12.2

Device Index: 0

Device Minor: 0

Model: NVIDIA GeForce RTX 4060 Laptop GPU

Brand: GeForce

GPU UUID: GPU-73f6ad9a-b926-49a8-5fbb-ce9cb487757b

Bus Location: 00000000:01:00.0

Architecture: 8.9
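The output above only confirms that the toolkit sees the GPU from the host side. Whether PyTorch inside a container can use it is a separate question. The following sketch is meant to be run inside the container (for example through `docker exec`); `gpu_report` is a name invented for this post, and it relies only on standard PyTorch attributes.

```python
def gpu_report():
    """Summarize what PyTorch can see; also works when torch is not installed."""
    try:
        import torch
    except ImportError:
        return {"torch_installed": False, "cuda_available": False}

    report = {
        "torch_installed": True,
        "torch_version": torch.__version__,
        "built_for_cuda": torch.version.cuda,  # None for CPU-only builds
        "cuda_available": torch.cuda.is_available(),
    }
    if report["cuda_available"]:
        report["device_name"] = torch.cuda.get_device_name(0)
    return report


if __name__ == "__main__":
    for key, value in gpu_report().items():
        print(f"{key}: {value}")
```

In a container started without GPU access, `cuda_available` would be expected to be `False` even though the installed torch build targets CUDA.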

The previous docker run command uses only the CPU, so stop the container and remove it.

docker stop audio

docker rm audio

Start the container with the following command.

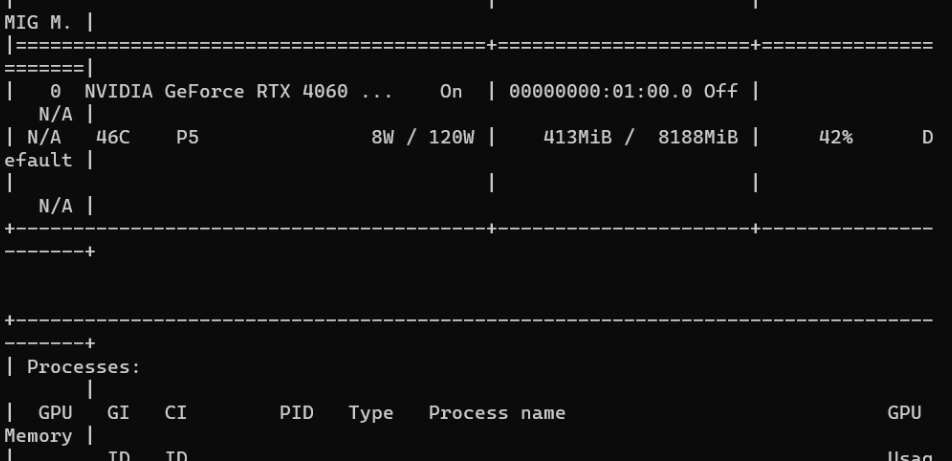

docker run --name audio --gpus all -p 7860:7860 -d audiocraft

Check the GPU usage while creating a song in the browser’s web UI.

nvidia-smi

The nvidia-smi command lets you check GPU status and usage directly from within WSL. It provides detailed information such as:

- Basic GPU information: View basic information about the GPU, such as name, ID, and driver version.

- GPU utilization: Check GPU utilization, memory usage, and other resource usage.

- Running Processes: See which processes are running on the GPU and how much GPU resources they are consuming.

- Temperature and Power: Check GPU temperature and power consumption.

- Errors and Warnings: Check for GPU-related errors and warnings.

This information is very useful for monitoring and troubleshooting GPU performance and health. Note that a plain nvidia-smi call prints a single snapshot. To refresh the output continuously, run it in loop mode, for example nvidia-smi -l 1 for a one-second interval.

Windows Task Manager, on the other hand, only provides basic GPU utilization and memory usage, and does not provide as much detail as nvidia-smi. Therefore, if you need more detailed GPU status and usage information, it is recommended that you use the nvidia-smi command.
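For logging or scripting, the same figures can be read in machine-friendly form with nvidia-smi's `--query-gpu` option. The sketch below is one possible way to do that; the field selection and the helper names are choices made for this example, and on WSL some fields may be reported as not available.

```python
import shutil
import subprocess

# Fields to request from nvidia-smi; the selection is arbitrary for this example.
FIELDS = ("name", "utilization.gpu", "memory.used", "temperature.gpu", "power.draw")


def parse_query_line(line, fields=FIELDS):
    """Turn one CSV line of nvidia-smi query output into a dict keyed by field name."""
    values = [value.strip() for value in line.split(",")]
    return dict(zip(fields, values))


def query_gpus(fields=FIELDS):
    """Run nvidia-smi once and return one dict per GPU."""
    command = [
        "nvidia-smi",
        f"--query-gpu={','.join(fields)}",
        "--format=csv,noheader,nounits",
    ]
    result = subprocess.run(command, capture_output=True, text=True, check=True)
    return [parse_query_line(line) for line in result.stdout.splitlines() if line.strip()]


if __name__ == "__main__":
    if shutil.which("nvidia-smi") is None:
        print("nvidia-smi not found on PATH")
    else:
        for gpu in query_gpus():
            print(gpu)
```

Calling `query_gpus()` in a loop while a song is being generated gives a simple record of utilization and memory use over time.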