Previously, I showed how to use WhisperSpeech in a Jupyter Notebook.

WhisperSpeech: An Advanced Text-to-Speech Conversion Tool

WhisperSpeech is a powerful tool that leverages cutting-edge speech synthesis technology to transform written text into natural-sounding speech. This article explores its capabilities and provides a comprehensive setup guide.

Key Features of WhisperSpeech

Text-to-Speech Conversion

WhisperSpeech excels at converting input text into high-quality speech using your selected voice profile. This feature is ideal for creating narrations, audiobooks, or any voice-based content.

Voice Cloning Capabilities

One of WhisperSpeech’s most impressive features is its ability to generate speech that mimics a specific person’s voice by analyzing a voice sample. This allows you to synthesize new text in that particular voice with remarkable accuracy.

Emotional Expression

WhisperSpeech can infuse synthesized speech with various emotions such as joy, sadness, or surprise, creating more engaging and natural-sounding audio content.

Real-Time Synthesis

The tool performs text-to-speech conversion in real-time, making it suitable for applications requiring immediate speech output and interactive experiences.

Custom Voice Model Creation

Users can train their own voice models tailored to specific purposes, allowing for highly customized speech synthesis solutions.

Speech Speed Adjustment

WhisperSpeech offers precise control over the speed of synthesized speech, enabling natural delivery or matching specific timing requirements for your projects.

Speech Style Customization

Fine-tune the pitch, tone, and other voice characteristics to create truly customized speech that fits your exact requirements.

By harnessing these powerful features, WhisperSpeech can be utilized in numerous scenarios including content creation, entertainment, education, and accessibility applications. The voice cloning function and emotional expression capabilities, in particular, make it an exceptionally versatile tool.

Why Speech Results Vary

Even when processing the same text multiple times, WhisperSpeech may produce slightly different results. Here’s why this happens:

1. Intentional Randomness

Modern speech synthesis systems deliberately introduce slight variations in intonation, timing, and pronunciation. This randomness is designed to make the synthesized speech sound more natural and human-like, avoiding the robotic quality of perfectly consistent output.

2. Model Uncertainty

Speech synthesis models rely on complex statistical algorithms that consider multiple possible outputs when generating speech. This inherent uncertainty in the model leads to subtle variations in the output, particularly noticeable in models that incorporate emotional expression and style customization.

3. Complex Internal Processing

The speech synthesis engine performs extremely sophisticated processing when converting text to speech. The algorithms and acoustic models may select different parameters based on various factors, contributing to slight variations in the generated speech.

4. Output Format and Encoding Differences

The format and encoding settings used when saving the audio can affect sound quality. Even with identical model parameters, the final output may vary depending on the specific compression algorithms and encoding methods employed.

5. System Resource Allocation

The state of your system’s resources (CPU, GPU, memory) can impact speech generation. If your system is under heavy load, the synthesis process might not execute in real-time, resulting in timing differences or quality variations.

These factors combine to create slight differences in each synthesis attempt, even with identical input text. While this variability makes the speech sound more natural and human-like, it also explains why generating perfectly identical speech repeatedly can be challenging.
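To make the first two factors concrete, here is a toy sketch of sampling-based generation. It is not WhisperSpeech's code: `sample_tokens` and the `TOKEN_PROBS` table are made up for illustration. It only shows that drawing from a probability distribution gives a different sequence on each run unless the random seed is fixed.

```python
import random

# Hypothetical next-token probabilities; a real model computes these at every step.
TOKEN_PROBS = {"a": 0.5, "b": 0.3, "c": 0.2}

def sample_tokens(n, seed=None):
    """Draw n tokens by weighted random sampling; a fixed seed makes the draw repeatable."""
    rng = random.Random(seed)
    tokens = list(TOKEN_PROBS)
    weights = list(TOKEN_PROBS.values())
    return rng.choices(tokens, weights=weights, k=n)

# Same seed gives the same sequence; without a seed the result usually changes per run.
print(sample_tokens(10, seed=42) == sample_tokens(10, seed=42))  # True
print(sample_tokens(10))
```

Whether a given speech tool exposes a seed setting depends on the tool, so treat this as an explanation of the principle rather than a recipe.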

Setting Up WhisperSpeech

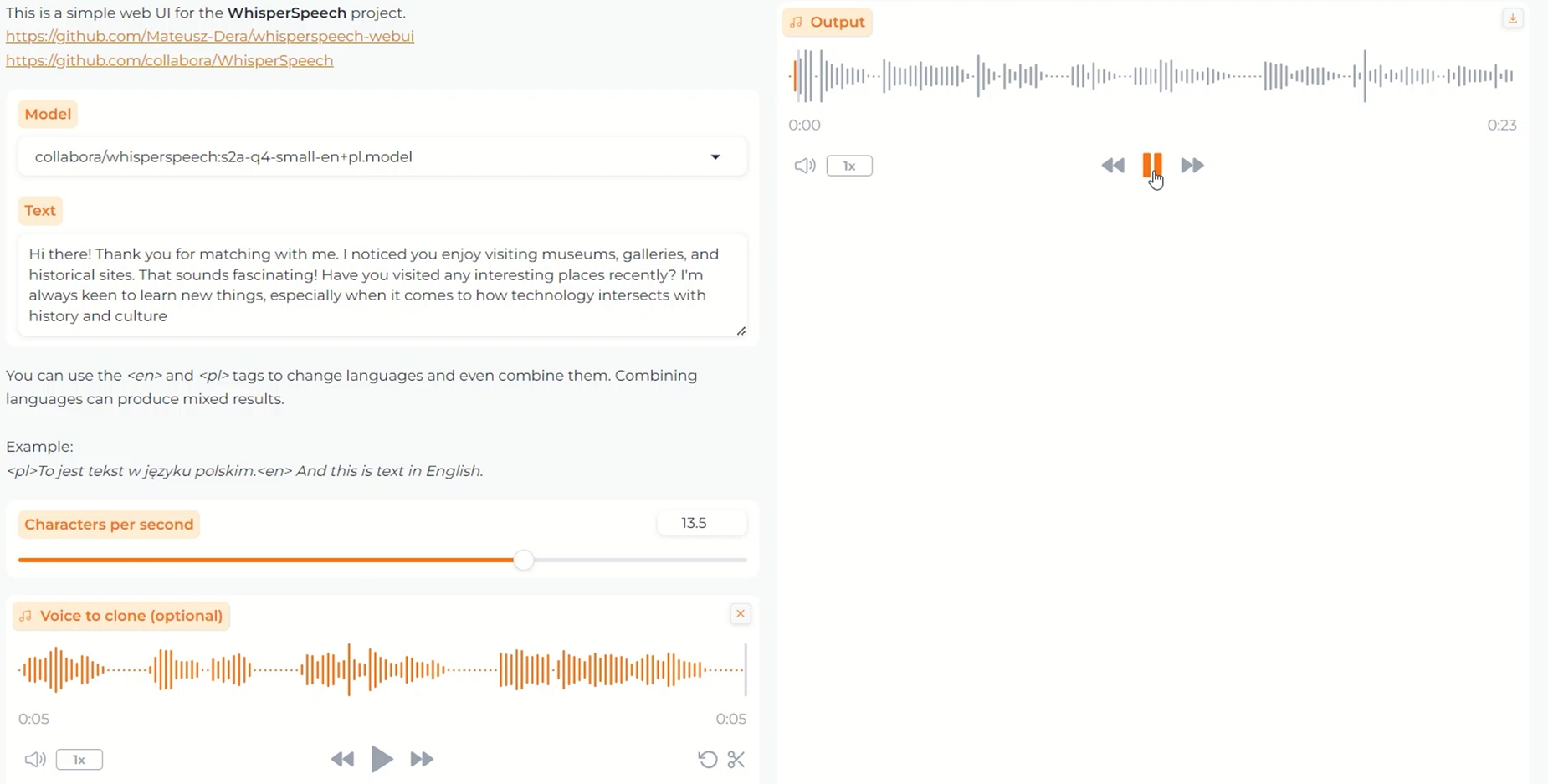

In this section, I’ll guide you through setting up WhisperSpeech via a browser interface similar to Stable Diffusion WebUI. We’ll be using the following GitHub repository as our reference:

https://github.com/Mateusz-Dera/whisperspeech-webui

Understanding Environment-Specific Settings

Before we begin, let’s clarify some environment-specific settings mentioned in the repository:

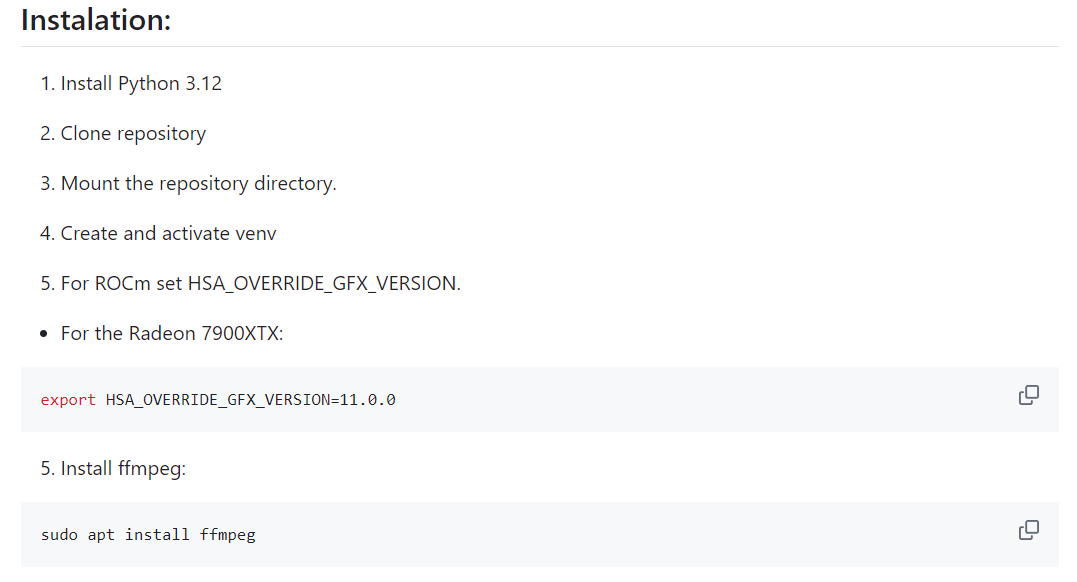

GPU Configuration: HSA_OVERRIDE_GFX_VERSION

You might notice the following command in the repository:

export HSA_OVERRIDE_GFX_VERSION=11.0.0

This setting specifically applies to AMD GPUs and has no relevance for NVIDIA GPUs. I’m using an NVIDIA GeForce RTX 4060 GPU, so this setting isn’t applicable in my case.

Important clarification:

- The HSA_OVERRIDE_GFX_VERSION environment variable is exclusively for AMD GPU users

- If you’re using an NVIDIA GPU (like the RTX 4060), you can safely ignore this setting

- This command will have no effect on NVIDIA systems and can be omitted from your setup process

- If you find this in a script or configuration file, it’s likely intended for AMD users but won’t cause any issues on your NVIDIA system
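If you write your own launcher, one way to apply the variable only where it matters is sketched below. The detection rule is my assumption, not something the repository does: it treats a machine with `rocminfo` on the PATH and no `nvidia-smi` as an AMD/ROCm system. The function name `gpu_env` is hypothetical.

```python
import os
import shutil

def gpu_env(which=shutil.which):
    """Return extra environment variables to set, based on which vendor tool is on PATH."""
    env = {}
    # Assumption: `rocminfo` present and `nvidia-smi` absent means an AMD/ROCm system.
    if which("rocminfo") and not which("nvidia-smi"):
        env["HSA_OVERRIDE_GFX_VERSION"] = "11.0.0"  # value taken from the repository's example
    return env

# Apply the overrides to the current process before starting any GPU work.
os.environ.update(gpu_env())
print(gpu_env())
```

On an NVIDIA machine this returns an empty dictionary, so nothing is changed.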

FFmpeg Installation

Another command you’ll encounter is:

sudo apt install ffmpeg

This is the installation command for FFmpeg, a crucial component for audio processing. However, this command structure is specific to Linux-based systems:

- sudo grants administrative privileges in Linux/macOS

- apt is the package manager for Debian-based Linux distributions (like Ubuntu)

If you’re using Windows with a Python virtual environment, these commands won’t work directly. You’ll need to consider one of the following approaches:

Choosing Your Setup Environment

Option 1: Windows Subsystem for Linux (WSL)

WSL provides a Linux-compatible environment on Windows, allowing you to:

- Run Linux commands and tools seamlessly

- Use Linux package managers like apt

- Access Windows files from within the Linux environment

- Maintain integration with your Windows system

WSL is an excellent choice for Windows users who need Linux capabilities without the complexity of a full virtual machine or dual-boot setup. It’s particularly convenient for development work that requires Linux-specific tools like the ones needed for WhisperSpeech.

Option 2: Native Linux Environment

A full Linux installation (either on dedicated hardware, dual-boot, or virtual machine) provides:

- Complete access to all Linux features and capabilities

- Potentially better performance for resource-intensive tasks

- No Windows/Linux integration concerns

Option 3: Virtual Machines

You could also run Linux in a virtual machine using:

- VirtualBox or VMware for CPU-only processing

- Proxmox for configurations that support GPU passthrough

My Recommendation

For this tutorial, I’ll be using WSL because my physical Linux machine is not high-spec. WSL provides a good balance of:

- Linux command-line capabilities

- Easy integration with Windows

- Sufficient performance for our needs

If you’re planning to run WhisperSpeech with CPU only (no GPU acceleration), VirtualBox or VMware would also be viable options.

In the next section, we’ll set up our Python environment using pyenv, which allows for easy Python version management. While my examples will use WSL, the same basic principles apply to a physical Ubuntu machine.

Setting Up the Python Environment

Now let’s configure the proper Python environment for WhisperSpeech. Following the recommended settings will help avoid compatibility issues and ensure smooth operation.

Checking Your Current Python Version

First, let’s verify which Python version is currently installed on your system:

python3 -V

This command displays your current Python version. For example, you might see:

Python 3.12.3

The GitHub repository recommends Python 3.12.0. While slightly newer versions like 3.12.3 might work, it’s generally safer to use the exact recommended version, especially with projects that have specific dependency requirements. Using the same version as recommended helps prevent unexpected compatibility issues.
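The same comparison can be done from inside Python. This is a small sketch of my own; `version_status` is a hypothetical helper, and the only value taken from the text above is the recommended version 3.12.0.

```python
import sys

RECOMMENDED = (3, 12, 0)  # version recommended by the repository, per the text above

def version_status(current=None, recommended=RECOMMENDED):
    """Classify an interpreter version against the recommended one."""
    current = tuple(current or sys.version_info[:3])
    if current == recommended:
        return "exact match"
    if current[:2] == recommended[:2]:
        return "same minor version, different patch"
    return "different version"

print(sys.version.split()[0], "->", version_status())
```

A result of "same minor version, different patch" corresponds to the 3.12.3 case described above: it may well work, but it is not the exact recommended version.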

Cloning the WhisperSpeech WebUI Repository

Let’s start by cloning the project repository from GitHub:

git clone https://github.com/Mateusz-Dera/whisperspeech-webui.git

This command creates a new directory named whisperspeech-webui and downloads all the project files into it. Git cloning is the standard practice for obtaining code from GitHub repositories.

Now, navigate into the project directory:

cd whisperspeech-webui

Installing the Recommended Python Version with pyenv

We’ll use pyenv to manage multiple Python versions on our system. This tool allows you to easily switch between different Python versions for different projects.

To install the recommended Python 3.12.0:

pyenv install 3.12.0

This command downloads and installs Python 3.12.0 through pyenv without affecting your system’s default Python installation.

Setting the Local Python Version

Once installed, we need to configure the project directory to use this specific Python version:

pyenv local 3.12.0

This creates a .python-version file in the current directory, instructing pyenv to automatically use Python 3.12.0 whenever you’re working in this directory. This ensures the correct Python version is used for WhisperSpeech without affecting other projects on your system.

Verifying the Python Version

To confirm that the correct Python version is now active:

pyenv version

You should see output similar to:

3.12.0 (set by /home/username/whisperspeech-webui/.python-version)

This confirms that Python 3.12.0 is correctly set for this directory.

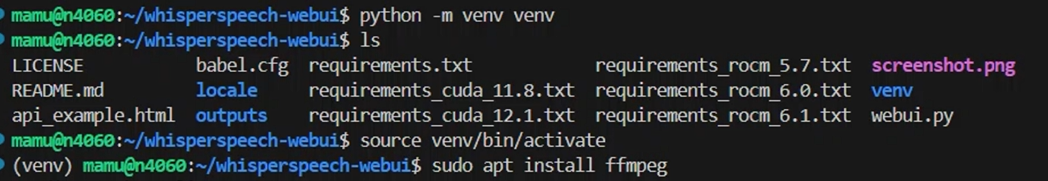

Creating a Virtual Environment

Next, let’s create a Python virtual environment to isolate our project dependencies:

python -m venv venv

This command creates a new directory named venv that contains an isolated Python environment. Using virtual environments is a best practice that keeps each project’s dependencies separate, preventing conflicts between different projects.

Activating the Virtual Environment

To activate the virtual environment:

source venv/bin/activate

After activation, your command prompt will change, typically showing the virtual environment name in parentheses at the beginning of the prompt. This indicates that you’re now working within the isolated environment where any packages you install will be contained within this project.
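If the prompt is not a reliable indicator in your terminal, you can also ask Python itself. The sketch below relies on standard behaviour of the `venv` module: inside a virtual environment, `sys.prefix` differs from `sys.base_prefix`. The helper name `in_virtualenv` is my own.

```python
import sys

def in_virtualenv():
    """True when the running interpreter belongs to a venv-style virtual environment."""
    # Inside a venv, sys.prefix points at the venv and sys.base_prefix at the base install.
    return sys.prefix != sys.base_prefix

print("virtual environment active:", in_virtualenv())
print("interpreter:", sys.executable)
```

When the environment is active, the interpreter path should point somewhere inside the venv directory.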

Understanding File Path Differences Between Windows and Linux/WSL

An important note for beginners: Windows and Linux (including WSL) use different syntax for file paths:

Windows Path Format:

- Uses backslashes (\) as separators

- Example: C:\Users\Username\Documents\

- Virtual environment activation: venv\Scripts\activate

Linux/WSL Path Format:

- Uses forward slashes (/) as separators

- Example: /home/username/documents/

- Virtual environment activation: source venv/bin/activate

Always use the correct format for your current environment. When following this guide in WSL, use the Linux-style paths with forward slashes.
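Python's `pathlib` can show both conventions side by side. In this sketch, `windows_to_wsl` is a hypothetical helper of mine that assumes WSL's default behaviour of mounting Windows drives under /mnt; it handles plain drive paths only, not network paths.

```python
from pathlib import PurePosixPath, PureWindowsPath

def windows_to_wsl(path):
    """Translate a Windows drive path to its default WSL mount point under /mnt."""
    p = PureWindowsPath(path)
    drive = p.drive.rstrip(":").lower()  # "C:" -> "c"
    return str(PurePosixPath("/mnt", drive, *p.parts[1:]))

print(PureWindowsPath("venv", "Scripts", "activate"))  # venv\Scripts\activate
print(PurePosixPath("venv", "bin", "activate"))        # venv/bin/activate
print(windows_to_wsl(r"C:\Users\Username\Documents"))  # /mnt/c/Users/Username/Documents
```

The last line is the practical one: it is how a folder you see in Windows Explorer appears from inside WSL.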

In the next section, we’ll install the necessary dependencies including FFmpeg and CUDA support to enable high-performance speech synthesis.

Installing Dependencies and Running WhisperSpeech

With our Python environment properly configured, let’s install the necessary dependencies and get WhisperSpeech up and running.

Installing FFmpeg

FFmpeg is an essential tool for processing audio and video files. WhisperSpeech relies on FFmpeg for audio conversion and manipulation.

To install FFmpeg in your WSL environment:

sudo apt install ffmpeg

What is FFmpeg?

FFmpeg is a powerful multimedia framework capable of decoding, encoding, transcoding, muxing, demuxing, streaming, filtering, and playing virtually any type of media. For WhisperSpeech, it handles:

- Audio format conversion

- Audio stream processing

- Encoding and decoding operations

- Sample rate adjustments

- Other essential audio manipulations

Why we use the apt package manager

The apt command is the package management system for Debian-based Linux distributions (including Ubuntu and the default WSL environment). It handles downloading, installing, and managing software packages along with their dependencies.

Important note for Windows users: This command works only in Linux environments (including WSL), not in standard Windows Command Prompt or PowerShell. This is one of the primary reasons we recommended using WSL for this project.
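After installing, a quick check from Python confirms that FFmpeg is on the PATH of the environment you are actually working in. This is a sketch with a helper name of my own (`ffmpeg_version`); it only wraps the standard `ffmpeg -version` command.

```python
import shutil
import subprocess

def ffmpeg_version(which=shutil.which):
    """Return the first line of `ffmpeg -version`, or None if ffmpeg is not on PATH."""
    exe = which("ffmpeg")
    if exe is None:
        return None
    result = subprocess.run([exe, "-version"], capture_output=True, text=True, check=False)
    lines = result.stdout.splitlines()
    return lines[0] if lines else None

print(ffmpeg_version() or "ffmpeg not found; install it with: sudo apt install ffmpeg")
```

Running this inside WSL and inside Windows PowerShell will often give different answers, which is a handy reminder that the two environments have separate PATHs.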

Installing CUDA and Related Dependencies

To leverage your NVIDIA GPU for accelerated speech processing, we’ll install CUDA and the required Python packages:

pip install -r requirements_cuda_12.1.txt

Understanding CUDA

CUDA (Compute Unified Device Architecture) is NVIDIA’s parallel computing platform and API model. It allows developers to use NVIDIA GPUs for general-purpose processing, dramatically accelerating compute-intensive applications.

For WhisperSpeech:

- GPU acceleration can reduce processing time by orders of magnitude compared to CPU-only processing

- Complex neural network operations run significantly faster

- Real-time speech synthesis becomes more feasible even with complex models

The requirements file explained

The requirements_cuda_12.1.txt file contains a list of all Python packages needed to run WhisperSpeech with CUDA 12.1 support. This includes:

- PyTorch with CUDA support

- Audio processing libraries

- Neural network frameworks

- WhisperSpeech-specific dependencies

Using this file with pip ensures all packages are installed with the correct versions and configurations for compatibility with CUDA 12.1.

Note: If you’re using a different CUDA version or don’t have a compatible GPU, the repository may provide alternative requirements files (check the GitHub repository for options like requirements_cpu.txt for CPU-only setups).
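Once the packages are installed, it is worth confirming that PyTorch can actually see the GPU before launching anything. The sketch below assumes PyTorch is among the installed requirements, as listed above; `cuda_status` is a hypothetical helper, while `torch.cuda.is_available()` and `torch.cuda.get_device_name()` are regular PyTorch calls.

```python
import importlib.util

def cuda_status():
    """Report whether PyTorch is installed and whether it can see a CUDA device."""
    if importlib.util.find_spec("torch") is None:
        return "torch not installed"
    import torch  # imported only after confirming the package is present
    if torch.cuda.is_available():
        return f"CUDA available: {torch.cuda.get_device_name(0)}"
    return "torch installed, CUDA not available"

print(cuda_status())
```

If this reports that CUDA is not available, synthesis may still run on the CPU, but it will be noticeably slower.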

Launching WhisperSpeech WebUI

Once all dependencies are installed, launching WhisperSpeech is straightforward:

python webui.py

This command starts the WhisperSpeech web interface. After initialization, you’ll typically see a message indicating the server is running and providing a URL (usually something like http://127.0.0.1:7860).
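If the browser shows nothing, you can test from a second terminal whether the server is accepting connections. This is a minimal sketch: the port 7860 is only the typical value mentioned above, so pass the port your own console output shows. `is_listening` is a name I made up.

```python
import socket

def is_listening(host="127.0.0.1", port=7860, timeout=1.0):
    """Return True if something accepts TCP connections on host:port."""
    try:
        with socket.create_connection((host, port), timeout=timeout):
            return True
    except OSError:
        return False

print("WebUI reachable:", is_listening())
```

A result of False while the server claims to be running usually points to a different port or host binding rather than a failed start.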

Understanding the Web Interface

WhisperSpeech’s web interface operates similarly to Stable Diffusion WebUI:

- It provides an intuitive browser-based control panel

- No need to write code or use command-line arguments

- Options for text input, voice selection, and generation settings

- Direct audio playback in the browser

- Download options for generated speech

First-time Launch Considerations

On the first run, WhisperSpeech may need to download additional model files. This is normal and may take some time depending on your internet connection. These files will be cached for future use.

Why WSL is Ideal for This Project

As mentioned earlier, we recommend using WSL (Windows Subsystem for Linux) for this project, especially for Windows users. Here’s a deeper explanation of why:

1. Linux Package Management

Tools like FFmpeg are easier to install and manage through Linux package systems. The apt command provides a simple one-line installation that handles all dependencies automatically.

2. Consistent Path Handling

Linux’s path handling is more straightforward for Python projects. Issues with backslashes, spaces in paths, and permission structures that often plague Windows setups are less problematic in Linux environments.

3. CUDA Integration

While CUDA works on both Windows and Linux, many AI frameworks and tools (including those used by WhisperSpeech) have more stable and better-optimized Linux implementations for CUDA.

4. Best of Both Worlds

WSL provides Linux capabilities while still allowing easy access to your Windows file system and applications. You can edit files with Windows tools while running Linux commands for the processing tasks.

Accessing Your Generated Audio

When you generate speech with WhisperSpeech, the output files will typically be saved in an outputs directory within your project folder. From WSL, you can access Windows tools to play or edit these files if needed.
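To find the most recent result quickly, something like the following sketch may help. It assumes the outputs directory mentioned above and a set of audio extensions that I picked for illustration; adjust both to what your installation actually produces.

```python
from pathlib import Path

AUDIO_SUFFIXES = {".wav", ".mp3", ".flac", ".ogg"}  # assumed set of output formats

def newest_audio(directory="outputs"):
    """Return the most recently modified audio file in `directory`, or None."""
    folder = Path(directory)
    if not folder.is_dir():
        return None
    files = [p for p in folder.iterdir() if p.suffix.lower() in AUDIO_SUFFIXES]
    return max(files, key=lambda p: p.stat().st_mtime, default=None)

print(newest_audio())
```

Run it from the project folder; it prints None when the directory does not exist or contains no audio yet.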

For a visual demonstration of WhisperSpeech in action, you can check out this YouTube video: WhisperSpeech Demonstration

Advanced WhisperSpeech Usage

Now that you have WhisperSpeech up and running, let’s explore some advanced usage scenarios and tips to get the most out of this powerful tool.

Fine-tuning Voice Models

WhisperSpeech allows you to fine-tune existing models to better match specific voice characteristics:

- Collect high-quality voice samples – 5-10 minutes of clear audio from your target voice

- Prepare properly formatted training data – audio files with corresponding text transcriptions

- Set appropriate training parameters – balance between training time and model quality

- Monitor for overfitting – ensure the model generalizes well beyond training samples
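For the data preparation step, a quick consistency check saves time later. The sketch below assumes a hypothetical layout in which each audio file sits next to a transcript with the same name (sample.wav and sample.txt). That layout is my assumption for illustration, not a format required by WhisperSpeech, and `pair_samples` is a made-up helper.

```python
from pathlib import Path

def pair_samples(directory):
    """Pair each .wav file with a same-named .txt transcript; also list unmatched audio."""
    folder = Path(directory)
    pairs, missing = [], []
    if not folder.is_dir():
        return pairs, missing
    for wav in sorted(folder.glob("*.wav")):
        txt = wav.with_suffix(".txt")
        if txt.is_file():
            pairs.append((wav.name, txt.read_text(encoding="utf-8").strip()))
        else:
            missing.append(wav.name)
    return pairs, missing

pairs, missing = pair_samples("training_data")  # hypothetical folder name
print(f"{len(pairs)} paired samples, {len(missing)} without a transcript")
```

Any file reported as missing a transcript should be fixed or removed before training starts.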

Creating Multi-speaker Systems

For projects requiring multiple distinct voices:

- Train separate models for each voice type

- Use speaker embeddings to differentiate between voices

- Create a frontend system for voice selection in your application

Optimizing Audio Quality

For the best possible output quality:

- Use lossless audio formats for training data (WAV or FLAC)

- Ensure consistent audio levels and recording conditions

- Consider post-processing tools for noise reduction and normalization

- Experiment with different sampling rates (higher isn’t always better)
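Consistency of recordings can be checked with Python's built-in `wave` module. This sketch works for uncompressed PCM WAV files only; the helper names and the training_data folder are hypothetical.

```python
import glob
import wave

def wav_info(path):
    """Return (sample_rate_hz, channels, duration_seconds) for a PCM WAV file."""
    with wave.open(str(path), "rb") as wf:
        rate = wf.getframerate()
        return rate, wf.getnchannels(), wf.getnframes() / rate

def inconsistent_files(paths):
    """List files whose sample rate or channel count differs from the first file."""
    infos = {p: wav_info(p)[:2] for p in paths}
    reference = next(iter(infos.values()), None)
    return [p for p, info in infos.items() if info != reference]

# Hypothetical folder; prints an empty list when nothing is found or all files agree.
print(inconsistent_files(sorted(glob.glob("training_data/*.wav"))))
```

Files flagged here are good candidates for resampling before they are mixed with the rest of the data.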

Resource Management

Speech synthesis can be resource-intensive. To optimize performance:

- CPU usage: Set appropriate batch sizes based on your system’s capabilities

- GPU memory: Monitor VRAM usage and adjust model complexity if needed

- Disk space: Generated models and audio files can consume significant storage

- Caching: Enable model caching to avoid reloading between sessions

Integration with Other Tools

WhisperSpeech works well within larger workflows:

- Content pipelines: Automate text-to-speech for content production

- Interactive applications: Use WebSockets or REST APIs for real-time synthesis

- Accessibility tools: Integrate with screen readers or text-based interfaces

- Creative platforms: Combine with music or video production software
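For a content pipeline, long articles usually need to be split into smaller pieces before synthesis. The sketch below shows one simple way to do that at sentence boundaries. The 200-character limit and the `chunk_text` helper are my assumptions; the actual synthesis call is left out because it depends on how you drive WhisperSpeech.

```python
import re

def chunk_text(text, max_chars=200):
    """Split text into chunks of roughly max_chars, breaking only at sentence ends.

    A single sentence longer than max_chars is kept whole rather than cut.
    """
    sentences = re.split(r"(?<=[.!?])\s+", text.strip())
    chunks, current = [], ""
    for sentence in sentences:
        if not sentence:
            continue
        candidate = f"{current} {sentence}".strip()
        if len(candidate) <= max_chars or not current:
            current = candidate
        else:
            chunks.append(current)
            current = sentence
    if current:
        chunks.append(current)
    return chunks

print(chunk_text("One. Two. Three.", max_chars=9))  # ['One. Two.', 'Three.']
```

Each chunk can then be sent to the synthesizer in turn and the resulting audio files joined afterwards.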

Common Troubleshooting

CUDA Installation Issues

If you encounter CUDA-related errors:

- Verify your NVIDIA drivers are up to date

- Ensure your GPU is CUDA-compatible

- Check that the CUDA version is supported by your installed driver version

- Try using the nvidia-smi command to confirm GPU visibility
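The GPU check can also be scripted, which is useful for watching VRAM usage during synthesis. This sketch calls nvidia-smi with its CSV query options and parses the result. The helper names are mine, and the sample line in the comment is illustrative rather than real output from my machine.

```python
import shutil
import subprocess

QUERY = ["--query-gpu=name,memory.used,memory.total", "--format=csv,noheader,nounits"]

def parse_gpu_line(line):
    """Parse one CSV line such as 'Some GPU, 1024, 8188' into (name, used_mib, total_mib)."""
    name, used, total = [field.strip() for field in line.rsplit(",", 2)]
    return name, int(used), int(total)

def gpu_memory():
    """Return one tuple per visible GPU, or an empty list if nvidia-smi is unavailable or fails."""
    exe = shutil.which("nvidia-smi")
    if exe is None:
        return []
    result = subprocess.run([exe, *QUERY], capture_output=True, text=True, check=False)
    if result.returncode != 0:
        return []
    return [parse_gpu_line(line) for line in result.stdout.splitlines() if line.strip()]

print(gpu_memory() or "no NVIDIA GPU visible from this environment")
```

An empty result inside WSL while the GPU works in Windows usually means the Windows NVIDIA driver or WSL itself needs updating.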

Python Environment Problems

For Python-related issues:

- Confirm virtual environment activation (prompt should show (venv))

- Verify Python version matches the recommended version

- Try reinstalling dependencies with pip install -r requirements_cuda_12.1.txt --force-reinstall

- Check for conflicting packages with pip list

Audio Processing Errors

If you experience audio output problems:

- Verify FFmpeg installation with ffmpeg -version

- Check input text for special characters or formatting issues

- Try simpler test phrases to isolate the problem

- Examine log output for specific error messages
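For the input text check, invisible characters copied from web pages or documents are a common culprit. The sketch below is one possible cleanup step using only the standard library; `clean_text` is a hypothetical helper, and whether WhisperSpeech needs this for a particular input is something to verify by testing.

```python
import unicodedata

def clean_text(text):
    """Normalize text and drop control/format characters before sending it to a TTS engine."""
    text = unicodedata.normalize("NFKC", text)
    kept = []
    for ch in text:
        if ch in "\n\t":
            kept.append(" ")  # treat line breaks and tabs as plain spaces
        elif unicodedata.category(ch).startswith("C"):
            continue  # skip control, format, and other non-printing characters
        else:
            kept.append(ch)
    return " ".join("".join(kept).split())  # collapse repeated whitespace

print(clean_text("Hello\u200b  world\x00!\n"))  # Hello world!
```

If a phrase fails before cleaning and works after it, the problem was in the text rather than in the audio pipeline.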

Keeping Up With WhisperSpeech Development

WhisperSpeech is an actively developed project. To stay current:

- Regularly check the GitHub repository for updates

- Join relevant communities on Discord or Reddit

- Watch for new model releases or technique improvements

- Consider contributing to the open-source project if you have skills to offer

Ethical Considerations

As with any voice synthesis technology, WhisperSpeech raises important ethical considerations:

- Consent: Obtain permission before cloning someone’s voice

- Transparency: Be clear when using synthetic voices in content

- Responsible use: Avoid creating misleading or potentially harmful content

- Privacy: Secure voice data and models appropriately

Conclusion

WhisperSpeech represents an impressive advancement in text-to-speech technology, making high-quality voice synthesis accessible to a wider audience. By following this guide, you now have a powerful tool for creating natural-sounding speech for various applications.

The combination of voice cloning capabilities, emotional expression, and customization options makes WhisperSpeech suitable for everything from content creation to accessibility tools. As with any powerful technology, using it responsibly and ethically is essential.

Whether you’re developing an application, creating content, or simply experimenting with AI voice technology, WhisperSpeech offers a flexible and powerful platform for your projects.

For a visual demonstration and more examples of WhisperSpeech in action, check out the video tutorial I’ve created:

WhisperSpeech Demonstration and Tutorial

Happy voice creating!